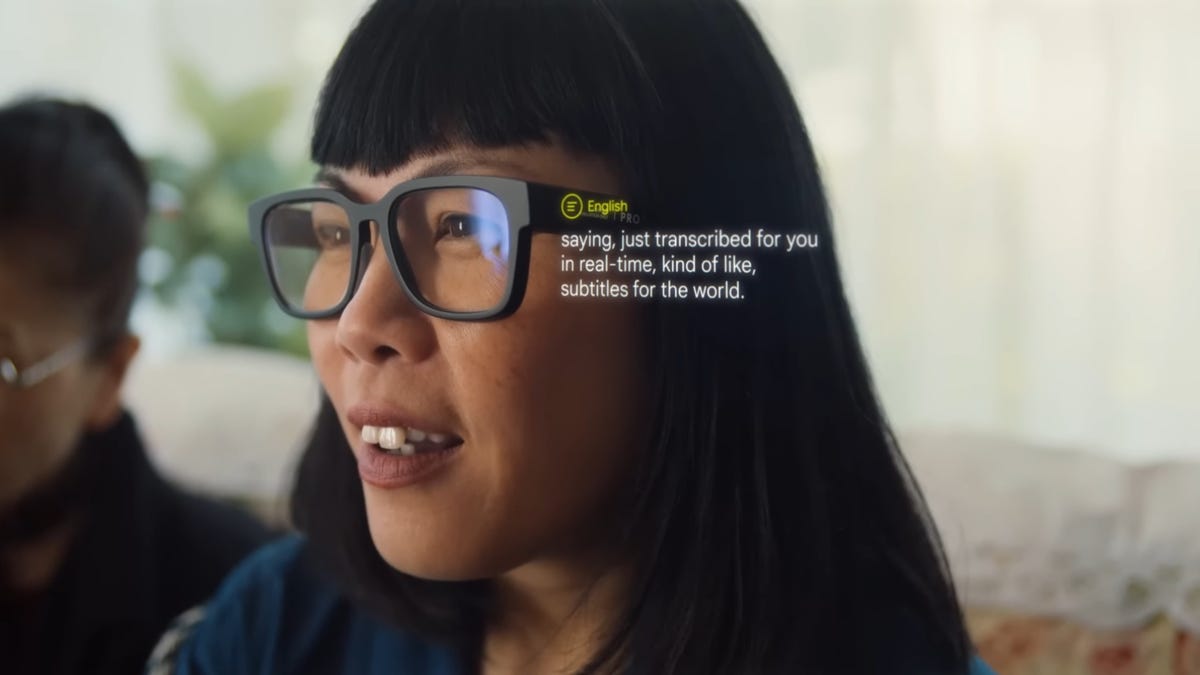

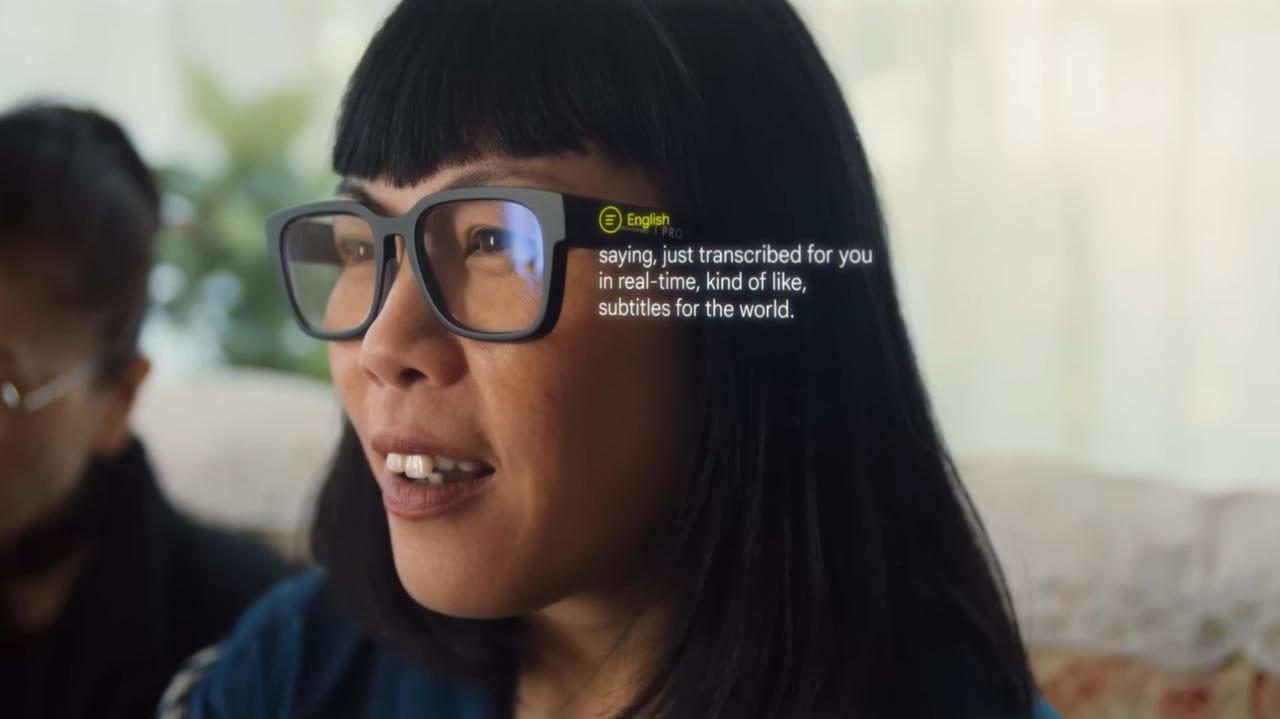

Exactly one year ago, Google unveiled a pair of augmented reality (AR) glasses at its I/O developer conference. But unlike Google Glass, this new concept, which didn’t have a name at the time (and still doesn’t), demonstrated the practicality of digital overlays, promoting the idea of real-time language translation as you were conversing with another person.

It wasn’t about shooting magic spells or seeing dancing cartoons but rather providing accessibility to something we all do every day: communicating.

The concept had the appearance of a regular pair of glasses, making it clear that you didn’t have to look like a cyborg in order to reap the benefits of today’s technology. But, again, it was just a concept, and Google hasn’t really talked about the product since then.

Twelve months have passed, and AR’s popularity has been eclipsed by another acronym: AI. Google and much of the tech industry have shifted their focus toward artificial intelligence and machine learning and away from metaverses and, I guess, glasses that help you transcribe language in real time. Google said the word “AI” 143 times during yesterday’s I/O event, as counted by CNET.

But something else at the event caught my eye. No, it wasn’t Sundar Pichai’s declaration that hotdogs are actually tacos but, instead, a feature that Google briefly demoed with the new Pixel Fold. (The taco of smartphones? Never mind.)

The company calls it Dual Screen Interpreter Mode, a transcription feature that leverages the front and back screens of the foldable and the Tensor G2’s processing power to simultaneously display what one person is saying and how it translates into another language. At a glance, you’re able to understand what someone else is saying, even if they don’t speak the same language as you. Sound familiar?

I’m not saying a foldable phone is a direct replacement for AR glasses; I still believe there’s a future where the latter exists and potentially replaces all the devices we carry around. But the Dual Screen Interpreter Mode on the Pixel Fold is the closest callback we’ve gotten to Google’s year-old concept, and I’m excited to test the feature when it arrives.

The Pixel Fold is available for pre-order right now, and Google says it will start shipping by next month. But even then, you’ll have to wait until the fall before the translation feature sees an official release, so stay tuned.
