The Segment Anything Model (SAM) is a recent proposal in the field. It is a vision foundation model that has been hailed as a breakthrough. It can use a variety of user interaction prompts to accurately segment any object in an image. Built on a Transformer model trained extensively on the SA-1B dataset, SAM handles a wide variety of scenes and objects. In other words, SAM makes the "segment anything" task practical. Because of its generalizability, this task has the potential to serve as a foundation for many future vision challenges.

Despite these improvements and the promising results of SAM and subsequent models on the segment anything task, practical deployment remains difficult. The primary obstacle in SAM's architecture is the high computational cost of its Transformer (ViT) backbone compared with convolutional alternatives. Growing demand from commercial applications motivated a team of researchers from China to develop a real-time solution to the segment anything problem, which they call FastSAM.

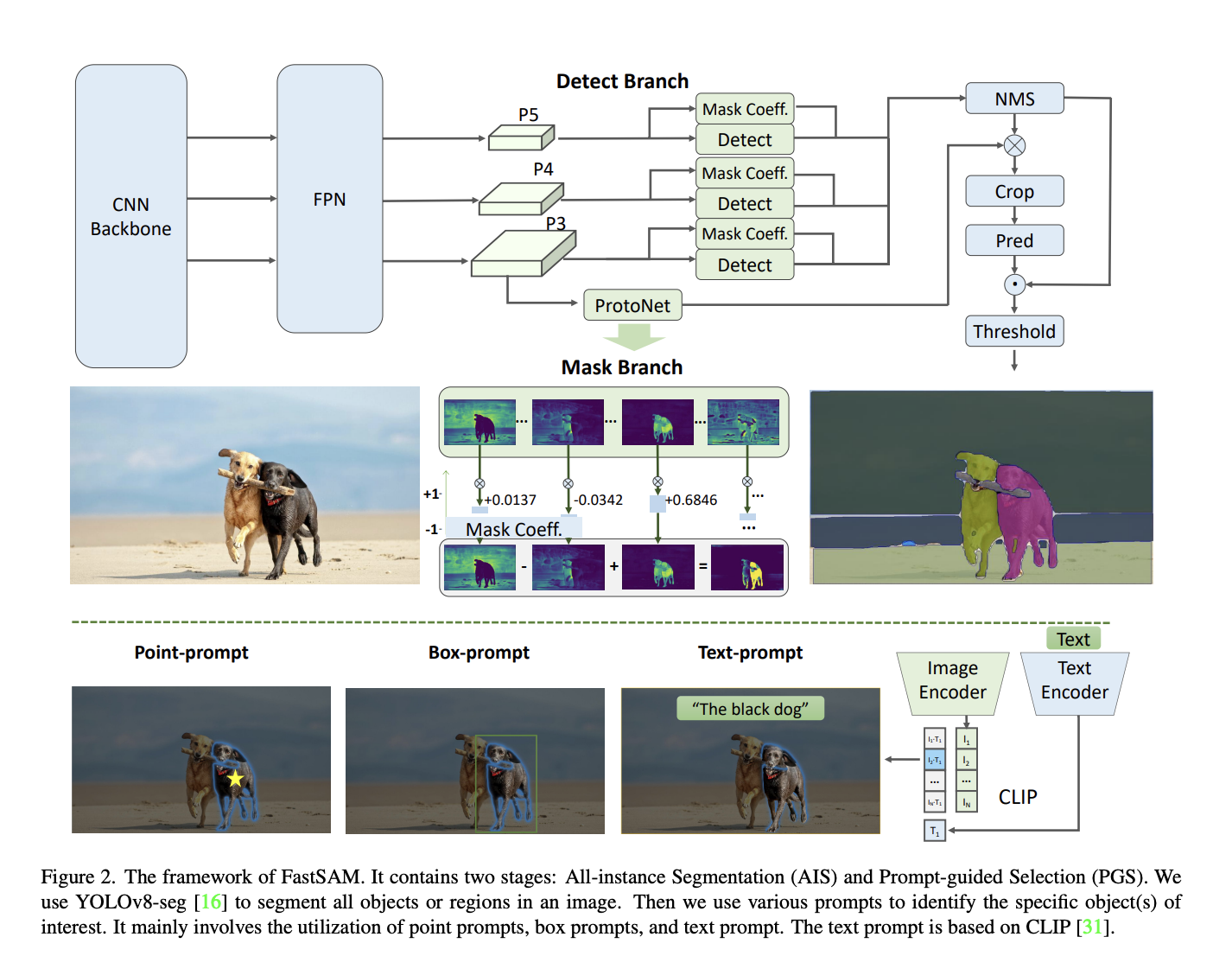

To solve this problem, the researchers split the segment anything task into two stages: all-instance segmentation and prompt-guided selection. The first stage uses a detector based on a Convolutional Neural Network (CNN) to generate segmentation masks for every instance in the image. The second stage then outputs the region of interest that matches the input prompt. They show that the computational efficiency of CNNs makes a real-time segment anything model feasible. They also believe their approach could pave the way for widespread use of this foundational segmentation task in commercial settings.
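The second stage can be illustrated with a minimal sketch, assuming the first stage has already produced a stack of boolean instance masks. The function names `select_by_point` and `select_by_box` are hypothetical, and the rules are a simplification: a point prompt keeps every mask that contains the point, and a box prompt keeps the mask with the highest IoU against the box. FastSAM's actual selection logic may differ in detail (for example, in how multiple or background points are handled).

```python
from __future__ import annotations

import numpy as np


def select_by_point(masks: np.ndarray, point: tuple[int, int]) -> np.ndarray:
    """Return indices of all masks that contain the (x, y) point prompt.

    masks: boolean array of shape (N, H, W), one mask per detected instance.
    """
    x, y = point
    # Row index is y, column index is x.
    return np.flatnonzero(masks[:, y, x])


def select_by_box(masks: np.ndarray, box: tuple[int, int, int, int]) -> int:
    """Return the index of the mask with the highest IoU against a box prompt.

    box: (x0, y0, x1, y1) in pixel coordinates, upper bounds exclusive.
    """
    x0, y0, x1, y1 = box
    box_mask = np.zeros(masks.shape[1:], dtype=bool)
    box_mask[y0:y1, x0:x1] = True
    inter = np.logical_and(masks, box_mask).sum(axis=(1, 2))
    union = np.logical_or(masks, box_mask).sum(axis=(1, 2))
    iou = inter / np.maximum(union, 1)  # guard against empty unions
    return int(np.argmax(iou))
```

Because every instance mask is computed once in the first stage, answering a new prompt only requires these cheap array operations rather than another pass through the network.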

FastSAM is built on YOLOv8-seg, an object detector equipped with an instance segmentation branch based on the YOLACT approach. The researchers also use SAM's comprehensive SA-1B dataset. Although this CNN detector is trained directly on only 2% (1/50) of SA-1B, it achieves performance on par with SAM while greatly reducing computational and resource demands, enabling real-time applications. They also demonstrate its generalization by applying it to various downstream segmentation tasks.
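YOLACT-style instance segmentation predicts a shared set of prototype masks for the whole image plus one coefficient vector per detected object; each instance mask is the sigmoid of a linear combination of the prototypes, cropped to the object's box. The sketch below shows only that assembly step in NumPy, with a hypothetical `assemble_masks` function and toy inputs. It is not YOLOv8-seg's code and omits the network heads, upsampling, and training.

```python
import numpy as np


def assemble_masks(prototypes, coefficients, boxes, threshold=0.5):
    """YOLACT-style mask assembly (illustrative sketch).

    prototypes:   (k, H, W) float array of prototype masks shared by all instances.
    coefficients: (n, k) float array, one coefficient vector per detected instance.
    boxes:        (n, 4) int array of (x0, y0, x1, y1) boxes, upper bounds exclusive.
    Returns a boolean array of shape (n, H, W).
    """
    _, h, w = prototypes.shape
    # Per-instance linear combination of prototypes, followed by a sigmoid.
    logits = np.einsum("nk,khw->nhw", coefficients, prototypes)
    probs = 1.0 / (1.0 + np.exp(-logits))
    # Build an (n, H, W) mask that is True only inside each instance's box.
    ys = np.arange(h)[None, :, None]
    xs = np.arange(w)[None, None, :]
    x0, y0, x1, y1 = (boxes[:, i][:, None, None] for i in range(4))
    inside = (xs >= x0) & (xs < x1) & (ys >= y0) & (ys < y1)
    # Zero out probabilities outside the box, then binarize.
    return (probs * inside) > threshold
```

The appeal of this design for real-time use is that the expensive part (the prototypes) is computed once per image, while each additional instance costs only a small matrix product.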

A real-time segment anything model has practical value for industry and a wide range of possible uses. The proposed method not only offers a novel, implementable solution for many vision tasks but also runs at very high speed, often tens or hundreds of times faster than conventional approaches. It also offers new perspectives on large model architectures for general vision problems. The research suggests that there are still cases where specialized models offer the best efficiency-accuracy trade-off. The method demonstrates the viability of a path that can greatly reduce the computational cost of running the model by introducing an artificial prior into its structure.

The team summarizes their main contributions as follows:

- The Segment Anything task is addressed with a novel, real-time CNN-based method that drastically reduces processing requirements without sacrificing performance.

- The work presents the first study of applying a CNN detector to the segment anything task, offering insights into the potential of lightweight CNN models for complex vision tasks.

- A comparison with SAM on various benchmarks reveals the merits and shortcomings of the proposed method in the segment anything domain.

Overall, FastSAM matches the performance of SAM while running 50x to 170x faster. Its speed could benefit industrial applications such as road obstacle detection, video instance tracking, and image editing. FastSAM can produce higher-quality masks for large objects in some images. By robustly and efficiently selecting objects of interest from a segmented image, FastSAM achieves real-time segment anything. The researchers conducted an empirical study comparing FastSAM with SAM on four zero-shot tasks: edge detection, object proposal generation, instance segmentation, and localization with text prompts. The results show that FastSAM runs 50 times faster than SAM-ViT-H and can efficiently handle many downstream tasks in real time.
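For the zero-shot edge detection task, instance masks have to be converted into an edge map. One simple way to do that, sketched below under the assumption that masks are boolean arrays, is to mark every mask pixel that has at least one 4-neighbour outside the mask and take the union over all instances. The function name `masks_to_edges` is hypothetical, and the paper's evaluation pipeline may derive edges differently (for example, by filtering mask probability maps rather than binary masks).

```python
import numpy as np


def masks_to_edges(masks: np.ndarray) -> np.ndarray:
    """Union of instance boundaries as a boolean (H, W) edge map.

    masks: boolean array of shape (N, H, W). A pixel is a boundary pixel if it
    belongs to a mask and at least one of its 4-neighbours does not; pixels
    beyond the image border are treated as outside the mask.
    """
    padded = np.pad(masks, ((0, 0), (1, 1), (1, 1)), constant_values=False)
    core = padded[:, 1:-1, 1:-1]
    # A pixel is interior only if it and all four neighbours are in the mask.
    interior = (
        core
        & padded[:, :-2, 1:-1]  # neighbour above
        & padded[:, 2:, 1:-1]   # neighbour below
        & padded[:, 1:-1, :-2]  # neighbour to the left
        & padded[:, 1:-1, 2:]   # neighbour to the right
    )
    boundaries = core & ~interior
    # Merge boundaries from all instances into a single edge map.
    return boundaries.any(axis=0)
```

For a 4x4 square mask, this marks the 12 pixels on its outer ring and leaves the 2x2 centre unmarked.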

Check out the Paper and GitHub Repo.


Dhanshree Shenwai is a Computer Science Engineer with good experience at FinTech companies covering the financial, cards and payments, and banking domains, and a keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements that make everyone's life easier in today's evolving world.
