
The innovations that AI makes possible can seem downright miraculous. But as a woman on Reddit recently learned, the technology comes with a disturbing underbelly.

That dark side includes seriously dangerous violations of privacy. The problem is far more widespread than most of us realize, and the law provides virtually no protection against it.

A woman discovered her husband has been using AI to generate ‘deepfake porn’ photos of her friends.

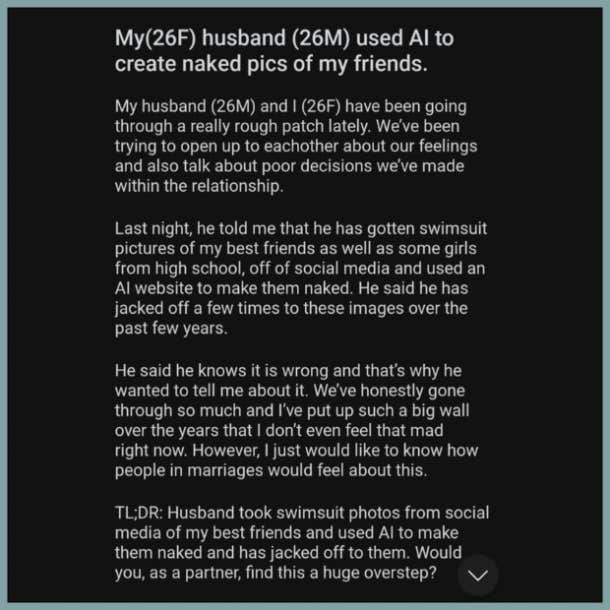

In her Reddit post, the woman detailed how she and her husband have been “going through a really rough patch lately.” In an effort to salvage their marriage, they’ve been digging into tough conversations, including those about “poor decisions we’ve made within the relationship.”

During one of those talks, her husband revealed behavior that many people would consider infidelity and that is also a disturbing breach of several women's privacy: he had been creating AI porn images from photos of her friends.

Her husband revealed that he has been using AI-generated nude photos for sexual pleasure.

“Last night, he told me he has gotten swimsuit pictures of my best friends as well as some girls [with whom I went to] high school, off of social media and used an AI website to make them naked,” she writes.

Her husband has been doing this for years, and he has done far more than just look at the images. “He said he has jacked off a few times to these images over the past few years,” she writes.

Photo: @Ask_Aubry/Twitter

She goes on to say that her husband “knows it is wrong and that’s why he wanted to tell me about it,” but his honesty was of little comfort to her or other people on Reddit.

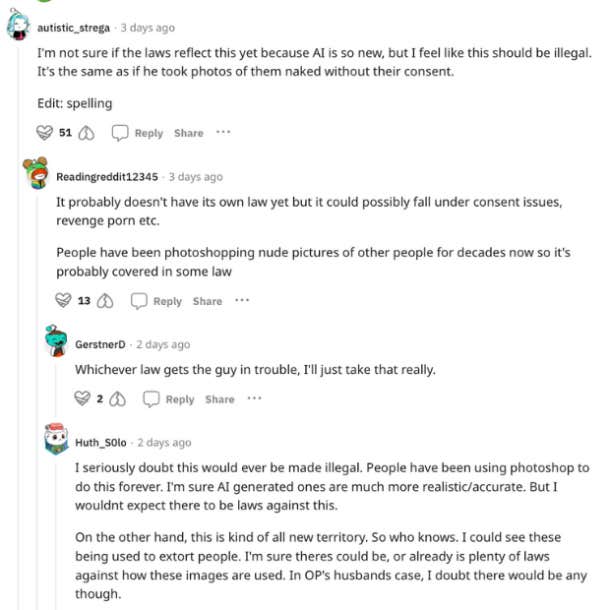

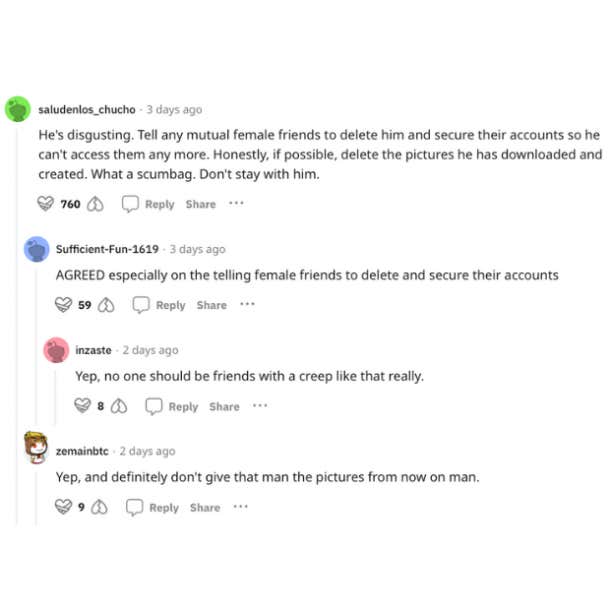

The woman’s fellow Redditors were deeply disturbed by her story. Many pointed out that this is not only a violation of privacy but one with the potential to ruin women’s lives if the images got into the wrong hands. Many also assumed that what her husband did could not possibly be legal.

Photo: Reddit

But while it seems like common sense that turning private people’s photos into porn without their consent must be illegal, it turns out that the law and technology have been moving at very different speeds.

AI-generated sexual images and videos, so-called ‘deepfake porn,’ have become an astonishingly widespread problem, and the law has not kept up.

Sadly, the Redditor’s friends are not even remotely alone when it comes to having their personal photos and videos transformed into pornography without their consent.

As detailed in the video below, a 2019 report by deepfake detection software company Sensity found that 96% of deepfakes were non-consensual porn, vastly outnumbering the fakes of politicians and celebrities more commonly thought to make up the bulk of the AI deepfake problem.

The explosion since then of AI-generated art, memes, and filters for social media apps like TikTok, to say nothing of ChatGPT, makes that figure even more bracing. It’s especially disturbing because, although this content dangerously violates people’s privacy and can damage their reputations, careers, and relationships, the people affected have next to no legal recourse.

Photo: Reddit

Only three states have laws addressing so-called “deepfake porn” on the books, even as the practice of creating it has exploded. Even copyright claims are no match for AI.

The US Copyright Office has so far ruled that AI-generated art cannot be copyrighted, and the law does not provide firm recourse even for stars like Drake and The Weeknd, whose voices were famously used in an AI-generated song they neither wrote nor sang.

So for ordinary people whose likenesses are hijacked for sexual content, the law’s protections are even weaker. While many states and countries have laws against revenge porn, the vast majority have yet to extend them to AI-generated content and deepfakes.

Thankfully, though, this may soon change. In May of 2023, Democratic New York Representative Joe Morelle introduced the Preventing Deepfakes of Intimate Images Act, Congressional legislation he says will send a message to AI porn creators like the man described in this Reddit post that “they’re not going to be able to be shielded from prosecution potentially. And they’re not going to be shielded from facing lawsuits.”

John Sundholm is a news and entertainment writer who covers pop culture, social justice and human interest topics.
