
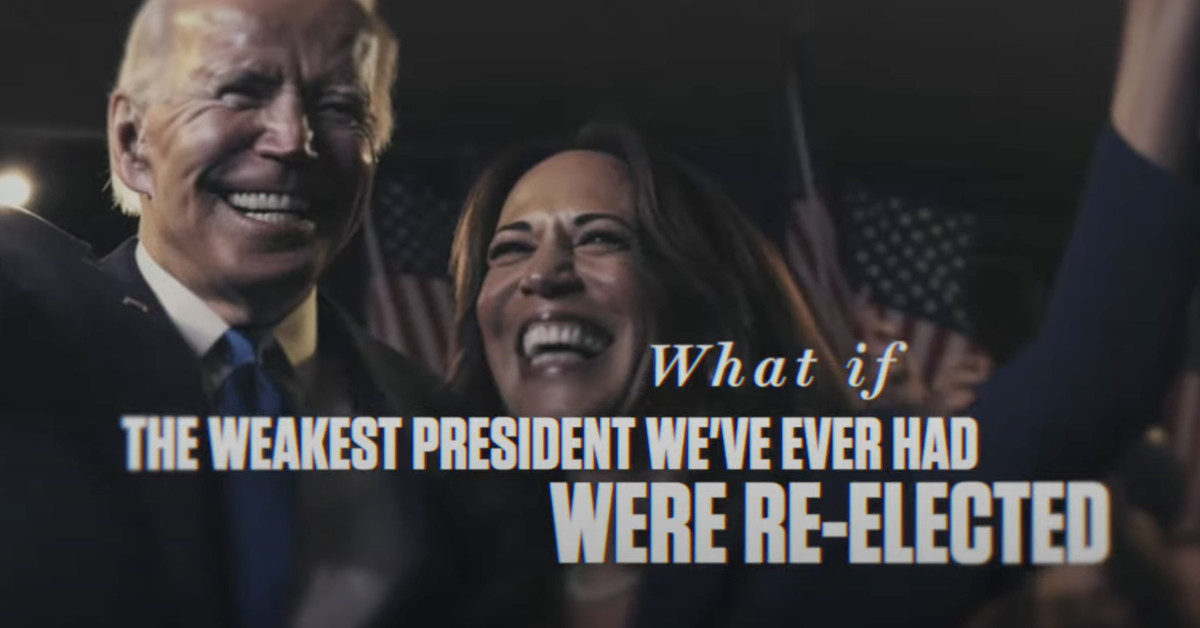

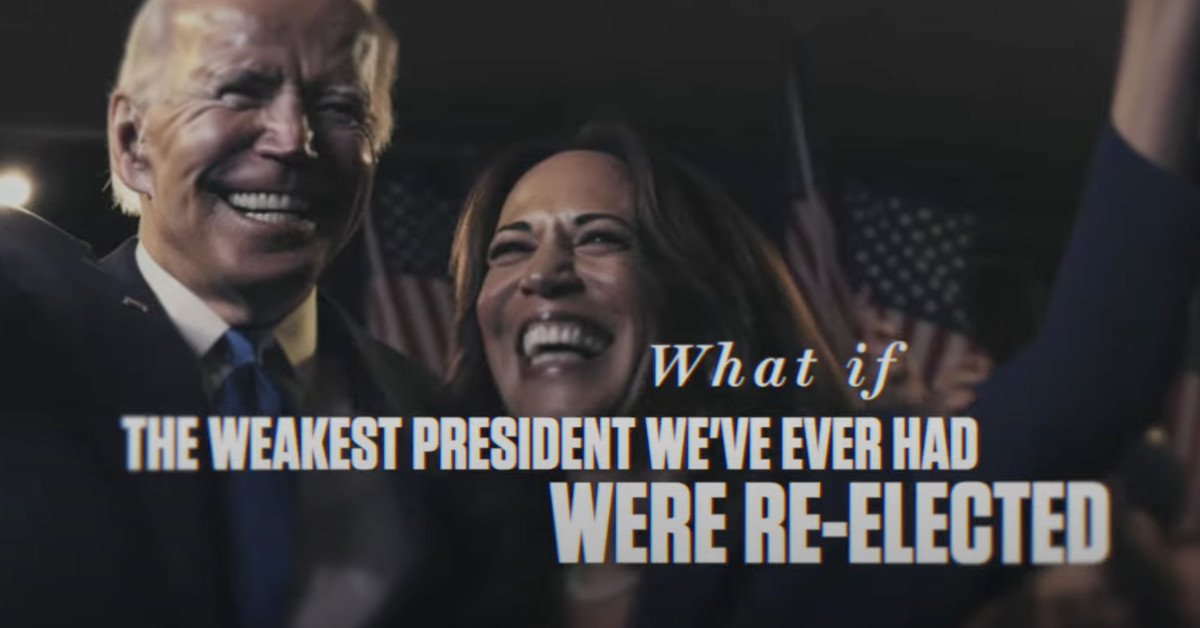

Joe Biden announced his reelection campaign this morning, and the Republican National Committee (RNC) has responded with an attack ad featuring AI-generated images.

The ad contains a series of stylized images imagining the aftermath of Biden's reelection in 2024. It suggests his victory will lead to a cascade of crises, with images depicting explosions in Taiwan after a Chinese invasion and military deployments on what are presumably US streets.

A small disclaimer in the top left of the frame says “built entirely with AI imagery,” while the caption underneath the YouTube video reads, “An AI-generated look into the country’s possible future if Joe Biden is re-elected in 2024.” A spokesperson for the RNC told Axios it was the first ad of its kind put out by the organization.

It’s not clear what tools were used to create the images, nor whether doing so would have violated any terms and conditions. A number of high-profile AI image generators like Midjourney and DALL-E limit the creation of overtly political images. (Midjourney, for example, won’t let you generate pictures of Xi Jinping to “minimize drama” and has banned the use of the term “arrested.”) However, most of the images in the ad are fairly generic and could likely be generated without falling foul of any system’s filters.

Other questions raised by the ad are complex and worrying. Many experts have warned that AI-generated deepfakes could be used to spread political misinformation, but what if that misinformation comes from politicians themselves? And how does one draw the line between misinformation and regular campaigning? For example, the RNC could have decided to make the ad about Biden’s age and included AI-generated images of him in a wheelchair. Viewers might well mistake the pictures for real photographs, especially if the disclaimers are as small as they are in today’s ad.

If politicians embrace deepfakes, they might also have a freer hand sharing such content online. In 2020, Facebook and Instagram owner Meta banned AI-generated content likely to mislead viewers, but the company refused to answer questions about whether that rule also applies to politicians, who are already exempt from fact-checking.
