There’s a reason why my iPhone is often the only camera I’ll carry with me. Its imaging quality allows me to take good photos without having to carry any additional gear.

My DSLR still beats my smartphone considerably in terms of image quality, but I don’t mind the loss in lots of non-professional scenarios. There’s a lot more than optics at work in my smartphone, however, and its camera wouldn’t be nearly as good without help from software.

While DSLR and mirrorless technology is evolving, recent photography has seen some of its most revolutionary changes in places like the smartphone and compact-camera worlds. Since there are size and cost limits to the imaging hardware that can be used in these cameras, innovation has come in the form of computational imaging processes. With recent rises in the accessibility of artificial intelligence, computational photography is evolving and will continue to grow. This article will provide an overview of computational photography and why it matters in many different contexts.

What is Computational Photography?

If you’ve never heard the term before, you’re not alone. Computational photography uses computing techniques such as artificial intelligence, machine learning, algorithms, or even simple scripts to capture and process images, usually alongside or after optical image capture. Although it might sound obscure, nearly every smartphone camera uses computational photography in some way. Without it, smartphone imaging capabilities would be far more limited.

Examples of Computational Photography

Chances are good that you’ve used computational photography if you own a smartphone. If not, you’ve probably seen people use it in lots of different ways.

Portrait mode on a smartphone

In most professional cameras, the portrait look comes from using a wide lens aperture. This creates a shallow depth of field and, without getting too complicated, blurs the background of the image.

Most smartphone cameras have fixed apertures or apertures that don’t open wide enough to create the portrait-style blur naturally. A wider aperture would be difficult and expensive to build into a phone, so smartphone manufacturers use computational photography to simulate the background blur. Portrait mode recognizes the subject, isolates it from the rest of the scene, and applies a blur to everything else, all in real time.
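To make the idea concrete, here is a minimal sketch of a simulated background blur. It assumes the hard part (recognizing the subject) has already produced a mask, and it uses a simple box blur on a tiny grayscale image stored as a list of rows. The names `box_blur`, `portrait_effect`, and `subject_mask` are hypothetical, and real phones use far more sophisticated depth estimation and blur models.

```python
def box_blur(image, radius=1):
    """Blur a grayscale image (list of rows) by averaging each pixel's neighbourhood."""
    height, width = len(image), len(image[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            # Collect the pixels in a square window, clipped at the image edges.
            neighbours = [
                image[ny][nx]
                for ny in range(max(0, y - radius), min(height, y + radius + 1))
                for nx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            row.append(sum(neighbours) / len(neighbours))
        blurred.append(row)
    return blurred


def portrait_effect(image, subject_mask, radius=1):
    """Keep pixels where subject_mask is True; use the blurred image elsewhere."""
    blurred = box_blur(image, radius)
    return [
        [image[y][x] if subject_mask[y][x] else blurred[y][x]
         for x in range(len(image[0]))]
        for y in range(len(image))
    ]


image = [[0, 0, 0], [0, 90, 0], [0, 0, 0]]
subject_mask = [[False, False, False], [False, True, False], [False, False, False]]
result = portrait_effect(image, subject_mask)
```

The subject pixel in the center is left untouched, while every background pixel is replaced by an average of its surroundings.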

Panorama modes on smartphones and cameras

Many smartphones and newer cameras have built-in panorama modes. With the push of a button, the imaging device will guide the photographer to sweep the camera along a straight line so that the device can take multiple images and stitch them in near real time. This creates a panorama in-camera, with no need to stitch the images manually afterward.

Computational photography is at work guiding the photographer, stitching the images, and creating a single panorama file that can be viewed instantly. Since most smartphones and smaller cameras have electronic shutters with minimal moving parts, the shutters can be activated very rapidly, allowing for quick panorama capture.
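The stitching step can be illustrated with a deliberately simplified sketch. It assumes two grayscale strips of equal height whose overlapping columns match exactly, which never happens with real photos; actual stitchers match features, correct for lens distortion, and blend the seams. The function names `find_overlap` and `stitch` are hypothetical.

```python
def find_overlap(left, right):
    """Return how many columns at the right edge of `left` match the left edge of `right`."""
    width = len(left[0])
    for overlap in range(min(width, len(right[0])), 0, -1):
        if all(row_l[width - overlap:] == row_r[:overlap]
               for row_l, row_r in zip(left, right)):
            return overlap
    return 0


def stitch(left, right):
    """Join two strips, keeping the shared columns only once."""
    overlap = find_overlap(left, right)
    return [row_l + row_r[overlap:] for row_l, row_r in zip(left, right)]


left_strip = [[1, 2, 3, 4]]
right_strip = [[3, 4, 5, 6]]
panorama = stitch(left_strip, right_strip)  # [[1, 2, 3, 4, 5, 6]]
```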

High dynamic range (HDR) modes on smartphones and cameras

With the press of one button, this feature uses algorithms and even machine learning to recognize the brightest and darkest parts of a scene. The camera will take photos at different exposures and combine them seamlessly, leading to a final product with detail in the brightest and darkest parts.
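One simple way to combine exposures is to weight each pixel by how well exposed it is. The sketch below is a simplified take on exposure fusion, not any manufacturer’s actual pipeline. It assumes the frames are already aligned, that brightness values run from 0.0 (black) to 1.0 (white), and the names `well_exposedness` and `fuse_exposures` are hypothetical.

```python
def well_exposedness(value):
    """Weight is highest at mid-gray (0.5) and close to zero at pure black or white."""
    return 1.0 - abs(value - 0.5) * 2.0 + 1e-6  # small offset avoids dividing by zero


def fuse_exposures(frames):
    """Blend aligned grayscale frames, favouring well-exposed pixels in each one."""
    height, width = len(frames[0]), len(frames[0][0])
    fused = []
    for y in range(height):
        row = []
        for x in range(width):
            values = [frame[y][x] for frame in frames]
            weights = [well_exposedness(v) for v in values]
            row.append(sum(w * v for w, v in zip(weights, values)) / sum(weights))
        fused.append(row)
    return fused


dark_frame = [[0.0, 0.5]]    # shadows crushed, highlights well exposed
bright_frame = [[0.5, 1.0]]  # shadows well exposed, highlights blown out
fused = fuse_exposures([dark_frame, bright_frame])
```

In this toy example, each output pixel ends up close to the value from whichever frame exposed it properly.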

This all happens quickly in most smartphones because a smartphone camera is always imaging when the camera app is in use. Otherwise, the live “preview” couldn’t be shown. Only the images that you press the shutter button for are saved to your device’s photo album, but there is always a buffer of photos that your smartphone hangs onto for a little bit and then discards. This is how Live Photo works in iPhones, and this is why you will probably see movement from before you actually pressed the shutter button in any Live Photo. In smartphones, the shutter button is very much like a “pause and save” button in a continuous imaging stream.
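The “pause and save” idea maps naturally onto a fixed-size buffer that always holds the most recent frames. This is a minimal sketch, assuming frames arrive one at a time; the class name `FrameBuffer` and its methods are hypothetical and do not correspond to any real camera API.

```python
from collections import deque


class FrameBuffer:
    """Keeps only the most recent frames from a continuous imaging stream."""

    def __init__(self, capacity):
        # A deque with maxlen silently discards the oldest frame when full.
        self._frames = deque(maxlen=capacity)

    def on_new_frame(self, frame):
        self._frames.append(frame)

    def press_shutter(self):
        """Return a copy of the buffered frames, oldest first."""
        return list(self._frames)


buffer = FrameBuffer(capacity=3)
for frame_number in range(5):
    buffer.on_new_frame(frame_number)
saved = buffer.press_shutter()  # [2, 3, 4]
```

Frames 0 and 1 were discarded before the shutter press, which is why the saved frames include only the moments just before it.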

Daytime “long exposures” on your smartphone

Similar to Apple’s Live Photo feature, many smartphones offer a feature to actually stack multiple buffered images to create a long exposure effect. This is a similar concept to time-stacking, which many landscape photographers use to get long exposures of water when it’s too bright to take a long exposure. The technique involves taking many shorter exposures and combining all of the imaging data using post-processing. Smartphones will use computational photography to align and combine shots from the buffer and create a long exposure effect.
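At its simplest, stacking means averaging the same pixel across every frame. The sketch below assumes the frames are already aligned (the alignment step is the harder part and is omitted here) and uses a hypothetical function name, `stack_frames`.

```python
def stack_frames(frames):
    """Average aligned grayscale frames pixel by pixel."""
    count = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    return [
        [sum(frame[y][x] for frame in frames) / count for x in range(width)]
        for y in range(height)
    ]


# A bright highlight moving across three short exposures...
frames = [[[9, 0, 0]], [[0, 9, 0]], [[0, 0, 9]]]
# ...smears into an even streak, as it would in a single long exposure.
long_exposure = stack_frames(frames)  # [[3.0, 3.0, 3.0]]
```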

How to do this on an iPhone: First, take a Live Photo in your Camera app. Then, find the image in your Photos app, tap the icon that says “Live” in the upper left corner, and choose Long Exposure. This option combines all of the buffered images that make up the Live Photo into one stacked image.

Night mode in many recent camera systems

Images at night are hard to make because there’s not enough light to create a well-exposed image with good contrast. In professional cameras, slow shutter speeds, wide apertures, and high ISOs help, but those usually require a tripod to minimize camera shake. Most people don’t want to use their smartphone camera with a tripod.

Many smartphone manufacturers have recently implemented a process similar to the buffering and stacking outlined above. The camera takes several shorter exposures (minimizing motion blur) and combines them into a brighter, higher-quality image, because the total amount of data captured is greater. If you combine that technique with brighter and darker exposures to capture more dynamic range (which some smartphone cameras do), you get images that look like they might have been taken with a DSLR when they were really taken with a tiny lens and sensor.
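A small simulation shows why more captured data helps. It assumes every pixel in a flat scene has the same true brightness and that each short exposure adds independent random noise; the names and numbers are made up for illustration. Averaging N such frames reduces random noise by about the square root of N, so 16 frames should cut the error to roughly a quarter.

```python
import random


def rms_error(values, truth):
    """Root-mean-square difference between measured values and the true value."""
    return (sum((v - truth) ** 2 for v in values) / len(values)) ** 0.5


def noisy_frame(truth, sigma, pixel_count, rng):
    """Simulate one short exposure of a flat scene with Gaussian sensor noise."""
    return [truth + rng.gauss(0.0, sigma) for _ in range(pixel_count)]


def average_frames(frames):
    """Average the same pixel across all frames."""
    count = len(frames)
    return [sum(pixels) / count for pixels in zip(*frames)]


rng = random.Random(42)  # fixed seed so the simulation is repeatable
TRUE_BRIGHTNESS, NOISE_SIGMA, PIXELS = 10.0, 4.0, 1000

frames = [noisy_frame(TRUE_BRIGHTNESS, NOISE_SIGMA, PIXELS, rng) for _ in range(16)]
single_error = rms_error(frames[0], TRUE_BRIGHTNESS)
stacked_error = rms_error(average_frames(frames), TRUE_BRIGHTNESS)
print(f"single frame error: {single_error:.2f}, stacked error: {stacked_error:.2f}")
```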

Computational Photography Uses Many Different Processes

This article is only an introduction to computational photography, so the technical details of algorithms and other techniques are beyond its scope. However, there are some really interesting techniques being used in the context of photography.

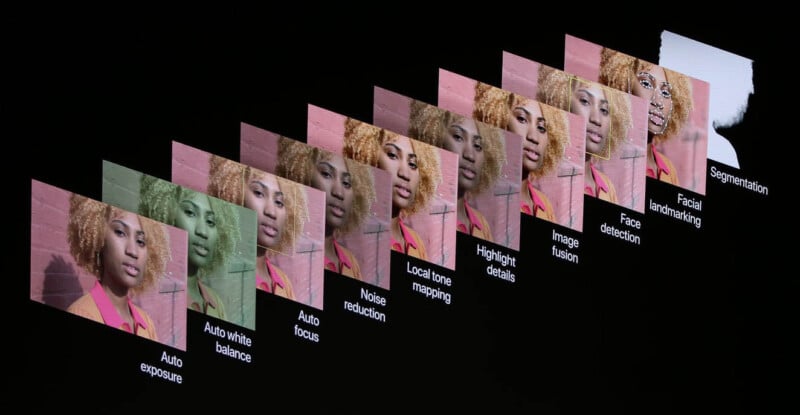

Neural networks and machine learning

In many recent cases, manufacturers have implemented neural networks (basically, simulated brains that use “neurons” to “think”) in computational photography. After being shown many examples of images that are too bright, too dark, or discolored, such a system learns to recognize when an image has those characteristics and can attempt to fix it. Although it won’t always be perfect, the billions of images that are made each day make for a huge database to use to teach systems how to judge and correct images.

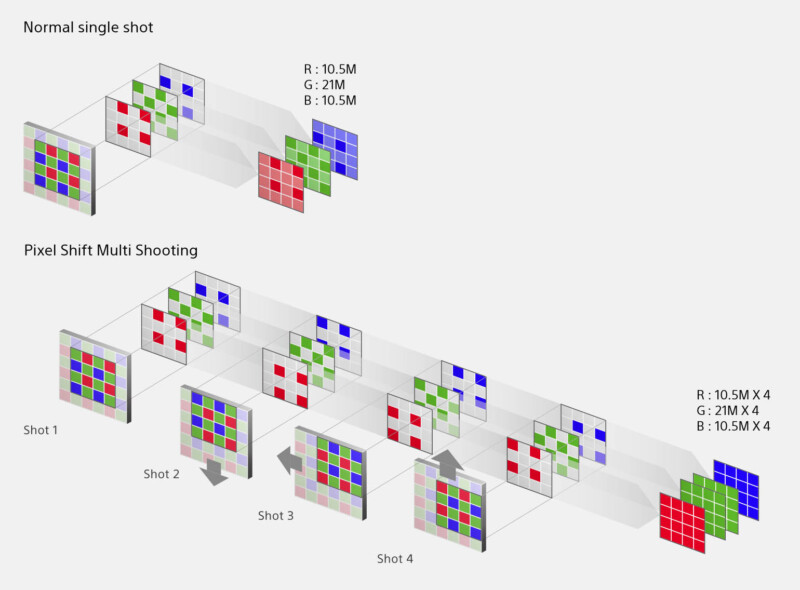

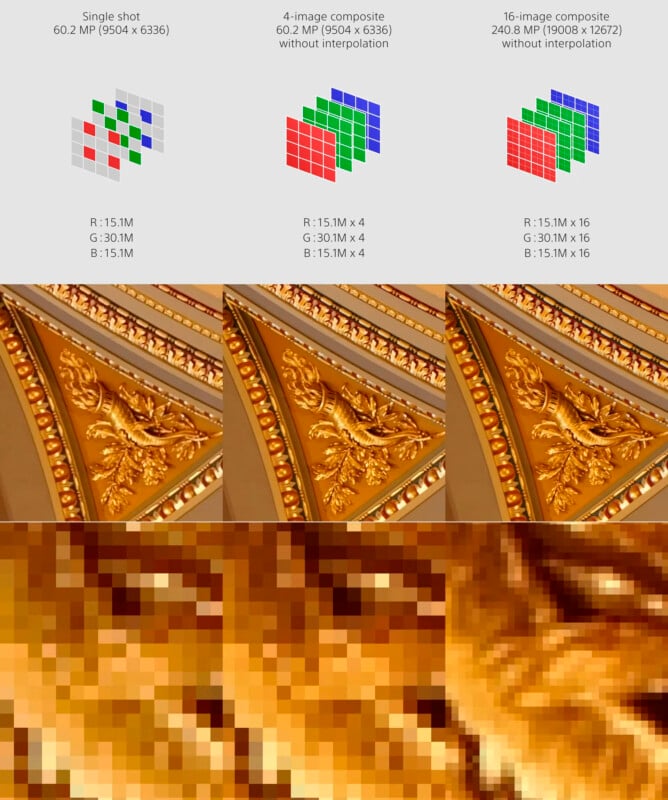

Pixel shifting

In computational photography, image-making is often an additive process. In other words, a final image is rarely the product of a single exposure; it is a combination of many captures with different parameters. Pixel shifting is another example of this, and it is often available in smartphones and very high-resolution mirrorless cameras. The process physically shifts the sensor one pixel at a time, using either a mechanism or naturally shaky hands. Combining multiple images captured just a pixel apart records more overall information.

Exactly how this happens is beyond the scope of this article, but the important thing is that computer processes make it possible to shift, combine, and output one sharp, higher-resolution image.

Pixel binning

Very basically, pixels help capture light, and larger pixels capture more light. However, smartphone cameras need to be small, and therefore their pixels need to be small. Pixel binning combines the data from a group of neighboring pixels (commonly four) into one larger effective pixel, which improves low-light performance at the cost of some resolution. Without computational photography, this technology wouldn’t be as accessible.
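As a rough illustration, here is a sketch of 2×2 binning on a grayscale sensor readout stored as a list of rows. It assumes a plain monochrome grid, whereas real sensors bin same-colored pixels under a color filter array and may do so in hardware. The function name `bin_2x2` is hypothetical.

```python
def bin_2x2(sensor):
    """Sum each 2x2 block of pixels into one value, halving width and height.

    Any leftover odd row or column is dropped.
    """
    height, width = len(sensor), len(sensor[0])
    return [
        [sensor[y][x] + sensor[y][x + 1] + sensor[y + 1][x] + sensor[y + 1][x + 1]
         for x in range(0, width - 1, 2)]
        for y in range(0, height - 1, 2)
    ]


readout = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
]
binned = bin_2x2(readout)  # [[14, 22]]
```

Each output value gathers the light from four small pixels, so the result has a quarter of the pixel count but a stronger signal per pixel.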

Focus stacking

The concept is fairly simple, but it used to be a very laborious process that mostly extreme macro photographers bothered with, since they work with extremely shallow depths of field. It involves taking several images focused at different distances and combining them to create one image with greater depth of field and detail. Smartphones and other cameras will do this automatically with the click of a single button and use computer algorithms to align and stack the images in seconds.
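The combining step can be sketched as picking, for every pixel, the frame in which that spot looks sharpest. The version below assumes the frames are already aligned and uses a crude sharpness measure (how much a pixel differs from its four neighbors). The names `local_sharpness` and `focus_stack` are hypothetical, and real implementations smooth the selection to avoid visible seams.

```python
def local_sharpness(image, y, x):
    """Sum of absolute differences between a pixel and its four neighbours."""
    height, width = len(image), len(image[0])
    centre = image[y][x]
    total = 0.0
    for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        # Clamp coordinates so edge pixels compare against themselves.
        ny = min(max(y + dy, 0), height - 1)
        nx = min(max(x + dx, 0), width - 1)
        total += abs(centre - image[ny][nx])
    return total


def focus_stack(frames):
    """For each pixel, take the value from whichever frame is sharpest there."""
    height, width = len(frames[0]), len(frames[0][0])
    return [
        [max(frames, key=lambda f: local_sharpness(f, y, x))[y][x]
         for x in range(width)]
        for y in range(height)
    ]


near_focus = [[0, 10, 5, 5]]  # detail on the left, smooth blur on the right
far_focus = [[5, 5, 0, 10]]   # smooth blur on the left, detail on the right
stacked = focus_stack([near_focus, far_focus])  # [[0, 10, 0, 10]]
```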

Why Does Computational Photography Matter?

Manufacturers are finding ways to make 12-megapixel sensors produce similar results to 50-megapixel sensors (although there are still limitations). This is all because of the power of algorithms, machine learning, scripts, and other computer-enabled processes that maximize the imaging capabilities of traditionally lower-grade hardware.

It’s not a perfect analogy, but a professional racecar driver could probably beat a novice driver in a race even if they swapped cars. The racecar driver, just like computational photography, uses skill to make the most of lower-grade hardware, while the novice driver has the hardware but lacks the skill. Computer processes are fast and can be trained efficiently, and they are being developed to get the most out of hardware that would typically not give great results. This reduces the need for expensive hardware and makes more possible with what we already have.

In some ways, this is a frightening aspect of modern photography. Traditionally, photographers have been championed because they have the knowledge, equipment, and experience to create stunning images in many different environments. If computational photography makes it possible to do more with less hardware, photographers need to compete with everyone who has a smartphone. There will always be the artistic side of photography, however, which will belong to photographers for at least a little bit longer. The rise of AI has brought important questions to the art world, and photography is no exception.

Computational photography is also making its way into the professional photography world. DSLRs and mirrorless cameras are seeing changes in autofocus systems that involve AI subject identification, as well as HDR, panorama, and other processes described above. It’s not just a smartphone thing anymore, and technology is changing – for better or worse.

Conclusion

We’ve come a long way from loading film into a camera and advancing it to get to the next shot. Significant advances in computer technology have recently made their way into smartphones and other image-making devices, something that many would’ve never thought possible.

Computational photography has traditionally been used to make high-quality photographs using hardware that would otherwise not produce such quality, and it’s making its way to professional cameras. Regardless of anyone’s opinion on the innovation happening today, it’s important to stay informed on technology and how imaging processes work so that photographers can use the tools available to them.

Image credits: Header photo by Ted Kritsonis for PetaPixel
