
Sentiment analysis is a natural language processing (NLP) technique that identifies the attitude behind a text. It is also known as opinion mining. The goal of sentiment analysis is to identify whether a certain text has positive, negative, or neutral sentiment. It is widely used by businesses to automatically classify the sentiment in customer reviews. Analyzing large volumes of reviews helps gain valuable insights into the customers’ preferences.

Setting Up Your Environment

You need to be familiar with Python basics to follow along. Navigate to Google Colab or open Jupyter Notebook, then create a new notebook. Execute the following command to install the required libraries in your environment.

! pip install tensorflow scikit-learn pandas numpy pickle5

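You will use the NumPy and pandas libraries for manipulating the dataset, TensorFlow for creating and training the machine learning model, and scikit-learn for splitting the dataset into training and testing sets. Finally, you will use pickle to serialize and save the tokenizer object. The pickle5 package is a backport for Python 3.7 and earlier; on Python 3.8 or later, the built-in pickle module does the same job and you can drop pickle5 from the install command.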

Importing the Required Libraries

Import the necessary libraries that you will use to preprocess the data and create the model.

import numpy as np

import pandas as pd

import tensorflow as tf

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.preprocessing.sequence import pad_sequences

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Embedding, Conv1D, GlobalMaxPooling1D, Dense, Dropout

import pickle  # standard library; the pickle5 backport is only needed on Python 3.7 and earlier

You will use the classes you import from the modules later in the code.

Loading the Dataset

Here, you’ll use the Trip Advisor Hotel Reviews dataset from Kaggle to build the sentiment analysis model.

df = pd.read_csv('/content/tripadvisor_hotel_reviews.csv')

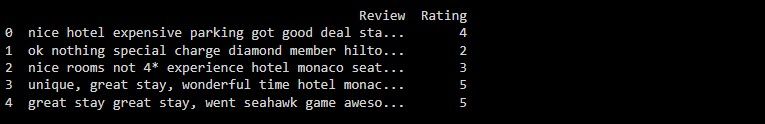

print(df.head())

Load the dataset and print its first five rows. Printing the first five rows will help you check the column names of your dataset. This will be crucial when preprocessing the dataset.

The Trip Advisor Hotel Reviews dataset has an index column, a Review column, and a Rating column.

Data Preprocessing

Select the Review and Rating columns from the dataset. Create a new column based on the Rating column and name it sentiment. If the rating is greater than 3, label the sentiment as positive. If the rating is less than 3, label it as negative. If the rating is exactly 3, label it as neutral.

Select only the Review and sentiment columns from the dataset. Shuffle the rows randomly and reset the index of the data frame. Shuffling and resetting ensure the data is randomly distributed, which is necessary for proper training and testing of the model.

df = df[['Review', 'Rating']].copy()  # copy so that adding a column does not trigger a pandas warning

df['sentiment'] = df['Rating'].apply(lambda x: 'positive' if x > 3
                                     else 'negative' if x < 3
                                     else 'neutral')

df = df[['Review', 'sentiment']]

df = df.sample(frac=1).reset_index(drop=True)
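The labelling rule can also be checked in isolation before applying it to the whole data frame. The following is a minimal sketch in plain Python; the function name `rating_to_sentiment` and the sample ratings are assumptions made for illustration and are not part of the tutorial's code.

```python
from collections import Counter

def rating_to_sentiment(rating):
    """Map a star rating to a sentiment label using the same thresholds as the lambda."""
    if rating > 3:
        return 'positive'
    if rating < 3:
        return 'negative'
    return 'neutral'

# Hypothetical ratings, used only to illustrate the rule.
sample_ratings = [5, 4, 3, 2, 1, 5]
labels = [rating_to_sentiment(r) for r in sample_ratings]

# Counting the labels shows how balanced (or unbalanced) the classes are.
label_counts = Counter(labels)
print(labels)
print(label_counts)
```

On the real data frame, `df['sentiment'].value_counts()` gives the same kind of count and is a quick way to see whether one class dominates.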

Convert the Review text into a sequence of integers using the tokenizer. This creates a dictionary of the unique words present in the Review text and maps each word to a unique integer value. Use the pad_sequences function from Keras to ensure that all review sequences have the same length.

tokenizer = Tokenizer(num_words=5000, oov_token='<OOV>')

tokenizer.fit_on_texts(df['Review'])

word_index = tokenizer.word_index

sequences = tokenizer.texts_to_sequences(df['Review'])

padded_sequences = pad_sequences(sequences, maxlen=100, truncating='post')
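To make the tokenizing and padding step more concrete, here is a simplified sketch in plain Python. It mimics the idea only and is not how Keras implements it. The helper names `build_word_index`, `to_sequence`, and `pad` are hypothetical, and the sketch assumes index 0 is reserved for padding and index 1 for out-of-vocabulary words.

```python
from collections import Counter

PAD, OOV = 0, 1  # assumed reserved indices: 0 for padding, 1 for unknown words

def build_word_index(texts, num_words):
    """Number the most frequent words from 2 upward, keeping indices below num_words."""
    counts = Counter(word for text in texts for word in text.lower().split())
    most_common = counts.most_common(num_words - 2)
    return {word: i + 2 for i, (word, _) in enumerate(most_common)}

def to_sequence(text, word_index):
    """Replace each word with its integer, or with OOV if it is not in the index."""
    return [word_index.get(word, OOV) for word in text.lower().split()]

def pad(sequence, maxlen):
    """Keep the first maxlen tokens, then left-pad with PAD up to maxlen."""
    truncated = sequence[:maxlen]
    return [PAD] * (maxlen - len(truncated)) + truncated

# Made-up reviews, used only for illustration.
demo_reviews = ["great hotel great staff", "terrible room"]
demo_index = build_word_index(demo_reviews, num_words=10)
demo_sequences = [to_sequence(r, demo_index) for r in demo_reviews]
demo_padded = [pad(s, maxlen=3) for s in demo_sequences]
print(demo_index)
print(demo_padded)
```

Cutting off the end of long reviews and adding zeros at the front of short ones mirrors what `pad_sequences(..., truncating='post')` does with its default `padding='pre'`.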

Convert the sentiment labels to one-hot encoding.

sentiment_labels = pd.get_dummies(df['sentiment']).values.astype('float32')  # recent pandas versions return booleans by default

One-hot encoding represents categorical data in a format that is easier for your models to work with.
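As a rough illustration of what one-hot encoding produces, the sketch below builds the same kind of matrix in plain Python. The function name `one_hot` is hypothetical. Sorting the class names mirrors how `pd.get_dummies` orders its columns for string labels, which is why column 0 ends up as negative, column 1 as neutral, and column 2 as positive.

```python
def one_hot(labels):
    """Return the sorted class names and one row of 0/1 flags per label."""
    classes = sorted(set(labels))
    rows = [[1 if label == cls else 0 for cls in classes] for label in labels]
    return classes, rows

# Made-up labels, used only for illustration.
classes, encoded = one_hot(['positive', 'negative', 'neutral', 'positive'])
print(classes)
print(encoded)
```

The column order matters later: the prediction code has to map index 0, 1, and 2 back to the right sentiment names.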

Splitting the Dataset Into Training and Testing Sets

Use scikit-learn to randomly split the dataset into training and testing sets. You will use the training set to train the model to classify the sentiments of the reviews, and the test set to assess how good the model is at classifying new, unseen reviews.

x_train, x_test, y_train, y_test = train_test_split(padded_sequences, sentiment_labels, test_size=0.2)

The test_size argument is 0.2. This means that 80% of the data will train the model, and the remaining 20% will test the model’s performance.
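The idea behind the split can be sketched without scikit-learn. The example below is an illustration only, not scikit-learn's implementation; the function name `split_indices` and the seed value are assumptions.

```python
import random

def split_indices(n_samples, test_size, seed):
    """Shuffle row indices and cut them into train and test parts."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # a fixed seed makes the shuffle repeatable
    n_test = round(n_samples * test_size)
    return indices[n_test:], indices[:n_test]

train_idx, test_idx = split_indices(n_samples=10, test_size=0.2, seed=42)
print(len(train_idx), len(test_idx))
```

If you want the same split every time you run the notebook, `train_test_split` accepts a `random_state` argument that plays the role of the seed here.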

Creating the Neural Network

Create a neural network with six layers.

model = Sequential()

model.add(Embedding(5000, 100, input_length=100))

model.add(Conv1D(64, 5, activation='relu'))

model.add(GlobalMaxPooling1D())

model.add(Dense(32, activation='relu'))

model.add(Dropout(0.5))

model.add(Dense(3, activation='softmax'))

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

model.summary()

The first layer of the neural network is an Embedding layer. This layer learns a dense representation of words in the vocabulary. The second layer is a Conv1D layer with 64 filters and a kernel size of 5. This layer performs convolution operations on the input sequences, using a small sliding window of size 5.
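The sliding-window behaviour of the Conv1D layer can be sketched for a single filter in plain Python. This is a toy illustration with made-up numbers, assuming no padding, a stride of 1, and a ReLU activation; the function name `conv1d_single_filter` is hypothetical.

```python
def conv1d_single_filter(sequence, kernel, bias=0.0):
    """Slide one filter over a sequence of vectors (no padding, stride 1, ReLU)."""
    k = len(kernel)
    outputs = []
    for start in range(len(sequence) - k + 1):
        window = sequence[start:start + k]
        # Multiply each weight with the matching input value and add everything up.
        total = bias + sum(
            w * x
            for kernel_row, vector in zip(kernel, window)
            for w, x in zip(kernel_row, vector)
        )
        outputs.append(max(0.0, total))  # ReLU keeps positive values only
    return outputs

# Toy input: 6 tokens, each embedded as a 2-dimensional vector (made-up numbers).
toy_sequence = [[1, 0], [0, 1], [1, 1], [0, 0], [2, 1], [1, 2]]
# One filter that spans 3 tokens (made-up weights).
toy_kernel = [[1, 0], [0, 1], [-1, 0]]

feature_map = conv1d_single_filter(toy_sequence, toy_kernel)
print(feature_map)
print(len(feature_map))
```

The output has 6 - 3 + 1 = 4 positions. By the same arithmetic, 100 tokens and a kernel size of 5 give 100 - 5 + 1 = 96 positions per filter in the model, and the layer has 64 such filters.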

The third layer, GlobalMaxPooling1D, reduces the sequence of feature maps to a single vector by taking the maximum value over each feature map. The fourth layer is a Dense layer with 32 units that applies a linear transformation followed by a ReLU activation. The fifth layer, Dropout, randomly sets a fraction (here 0.5) of its input units to 0 during training, which helps prevent overfitting. The final layer uses softmax to convert the output to a probability distribution over the three possible classes: positive, neutral, and negative.
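Two of these steps, global max pooling and the softmax output, are simple enough to sketch in plain Python. The numbers below are made up, and the function names `global_max_pool` and `softmax` are illustrative rather than Keras APIs.

```python
import math

def global_max_pool(feature_maps):
    """Keep only the largest activation of each filter's feature map."""
    return [max(feature_map) for feature_map in feature_maps]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

# Made-up feature maps from two filters, and made-up scores from the final layer.
pooled = global_max_pool([[0.1, 0.9, 0.3], [0.0, 0.2, 0.7]])
probabilities = softmax([0.5, 1.0, 3.0])
print(pooled)
print(probabilities)
```

The class with the largest score also gets the largest probability, so taking the argmax of the softmax output picks the predicted sentiment.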

Training the Neural Network

Train the model on the training set and pass the testing set as validation data. Train the model for ten epochs; you can change the number of epochs to your liking.

model.fit(x_train, y_train, epochs=10, batch_size=32, validation_data=(x_test, y_test))

After each epoch, the model’s performance on the testing set is evaluated.

Evaluating the Performance of the Trained Model

Use the model.predict() method to predict the sentiment labels for the test set. Calculate the accuracy score using the accuracy_score() function from scikit-learn.

y_pred = np.argmax(model.predict(x_test), axis=-1)

print("Accuracy:", accuracy_score(np.argmax(y_test, axis=-1), y_pred))

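In the original run, the accuracy of this model was about 84%. Your result may differ slightly because the shuffle and the train/test split are random.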
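The evaluation boils down to two steps: take the index of the largest value in each row, then count how often the predicted index matches the true one. The sketch below shows this in plain Python with made-up numbers; the function names `argmax` and `accuracy` are illustrative stand-ins for `np.argmax` and `accuracy_score`.

```python
def argmax(values):
    """Return the index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])

def accuracy(true_one_hot, predicted_probs):
    """Share of rows where the predicted class equals the true class."""
    true_classes = [argmax(row) for row in true_one_hot]
    predicted_classes = [argmax(row) for row in predicted_probs]
    correct = sum(t == p for t, p in zip(true_classes, predicted_classes))
    return correct / len(true_classes)

# Made-up labels and model outputs for four reviews.
toy_true = [[0, 0, 1], [1, 0, 0], [0, 1, 0], [0, 0, 1]]
toy_probs = [[0.1, 0.2, 0.7], [0.6, 0.3, 0.1], [0.2, 0.3, 0.5], [0.1, 0.1, 0.8]]

toy_accuracy = accuracy(toy_true, toy_probs)
print(toy_accuracy)
```

Here three of the four toy predictions match, so the sketch prints 0.75.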

Saving the Model

Save the model using the model.save() method. Use pickle to serialize and save the tokenizer object.

model.save('sentiment_analysis_model.h5')

with open('tokenizer.pickle', 'wb') as handle:
    pickle.dump(tokenizer, handle, protocol=pickle.HIGHEST_PROTOCOL)

The tokenizer object will tokenize your own input text and prepare it for feeding to the trained model.
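The save-and-load round trip can be tried on any Python object before using it on the tokenizer. In this minimal sketch a plain dictionary stands in for the tokenizer, and the file is written to a temporary directory; both are assumptions made for illustration.

```python
import os
import pickle
import tempfile

# A plain dictionary stands in for the tokenizer here (an assumption for illustration).
stand_in = {'word_index': {'<OOV>': 1, 'hotel': 2, 'room': 3}, 'num_words': 5000}

# Write to a temporary directory so the sketch does not touch your working files.
path = os.path.join(tempfile.mkdtemp(), 'stand_in.pickle')

with open(path, 'wb') as handle:
    pickle.dump(stand_in, handle, protocol=pickle.HIGHEST_PROTOCOL)

with open(path, 'rb') as handle:
    restored = pickle.load(handle)

# The restored object is an equal copy, not the same object in memory.
print(restored == stand_in)
```

Only unpickle files you created yourself or otherwise trust, since loading a pickle can execute arbitrary code.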

Using the Model to Classify the Sentiment of Your Own Text

After creating and saving the model, you can use it to classify the sentiment of your own text. First, load the saved model and tokenizer.

import keras

model = keras.models.load_model('sentiment_analysis_model.h5')

with open('tokenizer.pickle', 'rb') as handle:
    tokenizer = pickle.load(handle)

Define a function to predict the sentiment of input text.

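def predict_sentiment(text):
    # Tokenize and pad the text the same way the training data was prepared
    text_sequence = tokenizer.texts_to_sequences([text])
    text_sequence = pad_sequences(text_sequence, maxlen=100, truncating='post')
    # Class probabilities in column order: negative, neutral, positive
    predicted_rating = model.predict(text_sequence)[0]
    predicted_class = np.argmax(predicted_rating)
    if predicted_class == 0:
        return 'Negative'
    elif predicted_class == 1:
        return 'Neutral'
    else:
        return 'Positive'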

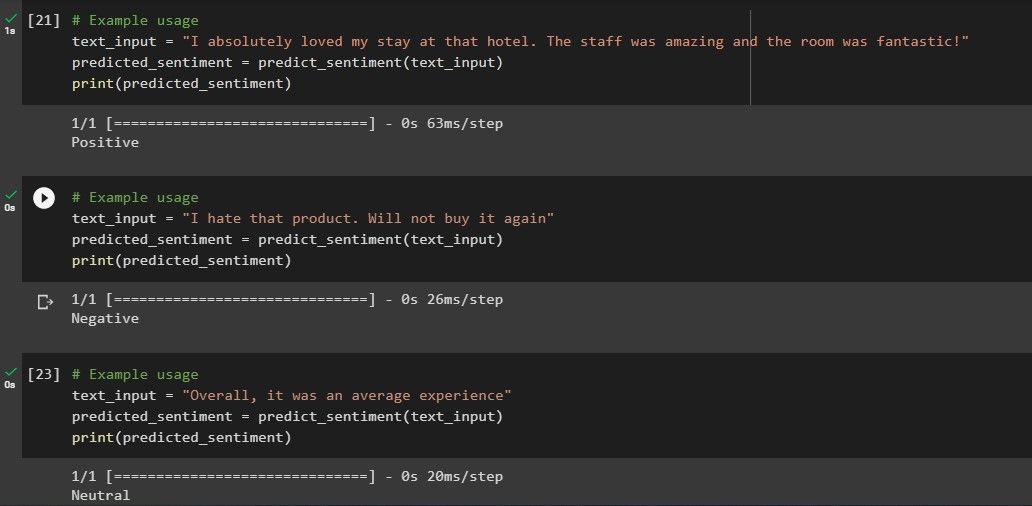
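The if/elif chain in predict_sentiment can also be written as a lookup in a list of labels, which makes it easy to return the probability alongside the label. This is an optional variation sketched in plain Python; the names `SENTIMENT_LABELS` and `label_from_probabilities` are hypothetical, and the probabilities are made up to stand in for the model's output.

```python
# The order must match the one-hot columns: negative, neutral, positive.
SENTIMENT_LABELS = ['Negative', 'Neutral', 'Positive']

def label_from_probabilities(probabilities):
    """Return the label with the highest probability, plus that probability."""
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return SENTIMENT_LABELS[best], probabilities[best]

# Made-up probabilities standing in for model.predict(...)[0].
label, confidence = label_from_probabilities([0.05, 0.15, 0.80])
print(label, confidence)
```

Returning the probability as well lets you spot predictions where the model is unsure, for example when the top two classes are close together.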

Finally, predict your own text.

text_input = "I absolutely loved my stay at that hotel. The staff was amazing and the room was fantastic!"

predicted_sentiment = predict_sentiment(text_input)

print(predicted_sentiment)

The predicted sentiment of the review above is as follows:

The model is able to classify the sentiments of all three reviews correctly.

Predicting Sentiments Using Pre-Trained Models

Sometimes in machine learning, finding the right dataset is a challenge. You may also lack the resources to create your own dataset. This is where pre-trained models come in. You only need to know how to use their API, and they handle the rest.
