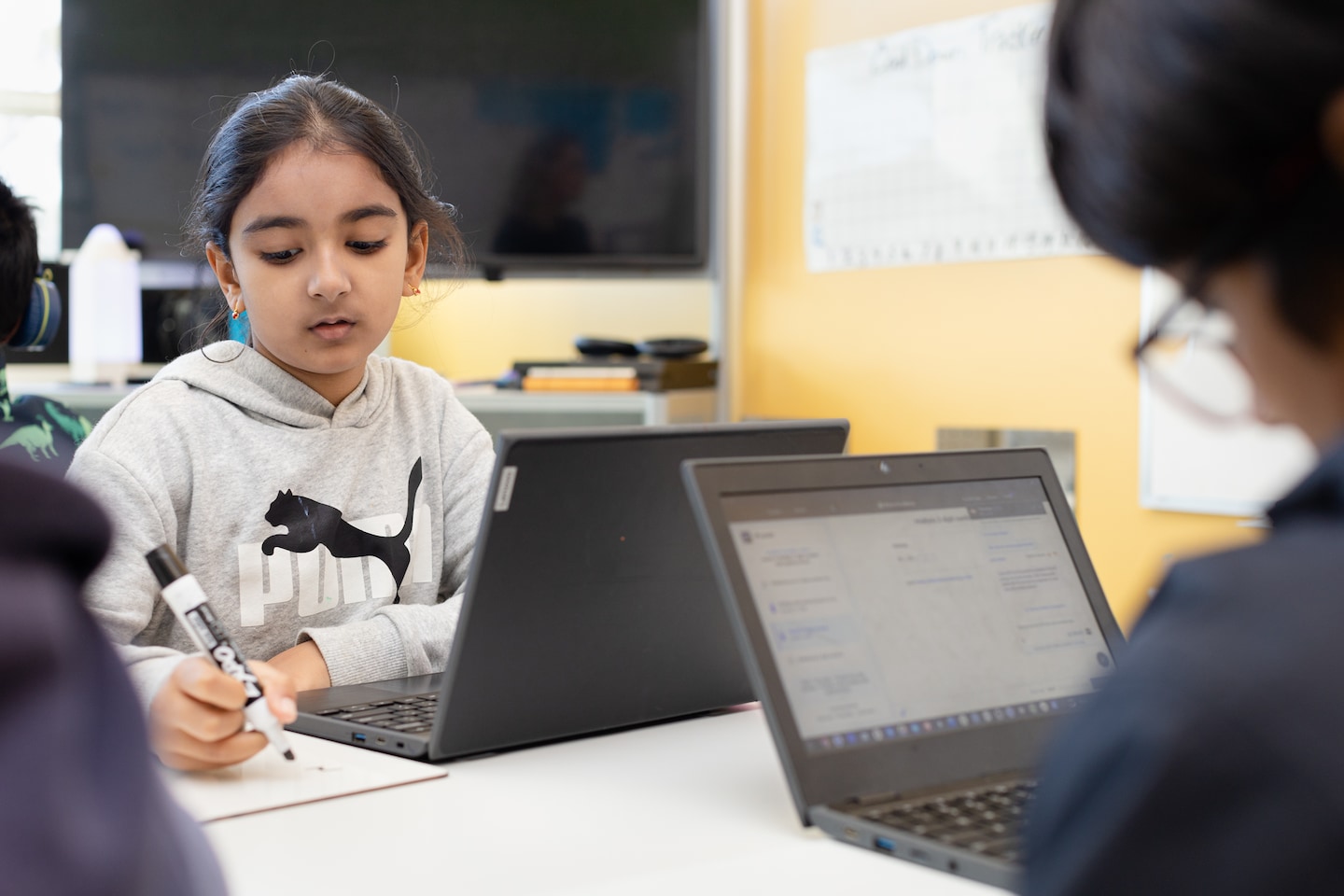

PALO ALTO, Calif. — As second-grader Alisha Agrawal opens her laptop to start a math problem, her chatbot tutor greets her with a request: “I’m still pretty new, so I sometimes make mistakes,” the chatbot, called Khanmigo, says in a pop-up window. “If you catch me making a mistake … press the thumbs down.”


Alisha’s teacher reiterates this potential for fallibility as she introduces her students to Khanmigo. “Sometimes, it’s wrong,” Julia Doscher tells them. “Just like sometimes I’m wrong.”

Schools around the country have banned ChatGPT, the popular artificial-intelligence chatbot similar to the one assisting Alisha, citing concerns that it can spit out inaccurate information, enable cheating or provide shortcuts that could hurt students in the long run. But last week, the private Khan Lab School campuses in Palo Alto and Mountain View welcomed a special version of the technology into their classrooms.

Rather than solve a math problem for a student, as ChatGPT might do if asked, Khanmigo is programmed to act like “a thoughtful tutor that’s actually going to move you forward in your work,” says Salman Khan, the technologist-turned-educator who founded Khan Academy and the Khan Lab School.

Khanmigo was developed in concert with OpenAI, the nonprofit tech start-up that created GPT-4, the underlying technology for the latest version of ChatGPT. OpenAI did not respond to a request for comment on the partnership.

The debate over whether to embrace or eschew new AI technologies reaches well beyond the classroom. Text-to-image generators like DALL-E and Midjourney have raised questions about whether AI-generated images amount to visual plagiarism and whether they are helping to spread misinformation. Tools like ChatGPT have ignited fears that they'll replace some people's jobs, while potentially making others' easier.

The same day that Alisha and her classmates played around with Khanmigo, Elon Musk and a handful of leaders in business, tech and academia signed an open letter calling for a six-month pause in training AI systems more powerful than GPT-4.

“Hopefully we are showing how positive this can be,” Khan said in an interview. While acknowledging there are “real concerns” around AI, he sees the benefits far outweighing the risks. “Why should we slow down?”

New technology is a regular part of life and conversation in Silicon Valley schools, where many students’ parents work in the industry. The median household income in Palo Alto is nearly $195,000, and tuition at the Khan Lab School is more than $31,000 per year.

In Doscher's class of third-graders, one boy talks effusively about FloofGPT, a chatbot he built with his dad and brother to simulate a dog. And in a ninth-grade world history class, students speak about APIs, or application programming interfaces, and name-check OpenAI in casual classroom conversation.

Many Khan Lab School students and their parents are already familiar with the concept of artificial intelligence and have likely played with ChatGPT at home, teachers and administrators say. Khan estimates that one-third of his 8-year-old’s friends’ parents work in AI.

“Parents for the most part are pretty excited about it,” Khan says. “Most people see the power here, they just want reasonable guardrails.”

Khan says he and his colleagues have spent thousands of hours building those guardrails for Khanmigo, though the safeguards might not always function as planned. Those protections include stronger moderation filters than ChatGPT's. The Khanmigo chatbot reminds students that their teachers can see what they write. And if they enter something concerning, like a swear word or a violent sentiment, teachers and parents will be alerted, Khan says.

In Doscher's class, one student sent a snarky comment to Khanmigo. After class, Doscher pulled the 7-year-old boy aside. "I can see that maybe you're frustrated," she told him. "You wouldn't talk to me that way."

Doscher had expected more "silly" questions during Khanmigo's debut, but said she was impressed that most of the questions students entered into the chatbot were math-focused. She noticed students posing more questions to Khanmigo than they might typically ask out loud.

As the class continues to use the tool, Doscher says she plans to explain that it's there to help, not to answer every question. If students were to use it too often, "I could see that really slowing down their pace."

Neil Siginatchu, an 8-year-old boy in her class who’s doing sixth-grade math, had mixed reactions to Khanmigo. “It gives more detailed description than I would have run through in my head or write on paper, which can be helpful, or it can be annoying,” Neil said. “Because if it’s too detailed … it’s a lot to read and if I don’t need it, it’s just excessive.”

After Alisha and her classmates experiment more with Khanmigo and send thumbs-up and thumbs-down reactions to the chatbot's responses, a team of humans plans to refine how the tutor interacts with students, Khan says.

After that, he plans to roll out the free Khanmigo tool to select schools around the country later this month. But both the area's tech fluency and the privileged makeup of the lab school, where the student-teacher ratio is 10 to 1, could affect how useful the tool is elsewhere, experts say.

Stephen Aguilar, an assistant professor of education at the University of Southern California, says he’s curious about how Khanmigo might function in different contexts.

“There’s often a presumption that big tech companies can take a tool and just move it to another place and it’ll work the same. But there’s the whole sociotechnical system that needs to be taken into account,” he says.

"Is there broadband that never goes down?" Aguilar wonders. "What sort of computers do these students have access to? Do they have their own or do they have to share? Do they have tech support? Is there a person on the ground, or do I need to call a number?"

Khan notes that the other schools that will be getting access to Khanmigo will have support from Khan Academy in that rollout, with a lot of hand-holding along the way. Khan Academy already provides its educational resources to more than 500 school districts and schools in the United States, with a focus on districts serving students from low-income communities and students of color.

Chatbots have been criticized for going off the rails in conversations with reporters, saying they can think or feel things. The people and data that train them are inherently biased, prompting concerns about widespread use of a technology that can espouse racist and sexist ideas. And the chatbots, while sometimes convincing, can also give wrong answers.

But in one day of testing at the Khan Lab School last week, Khanmigo appeared to stay in line. At the Khan Lab high school in Mountain View, ninth-grade history teacher Derek Vanderpool nods to these concerns with his students, reminding them that they should always double-check any information they get from Khanmigo.

Vanderpool directs his students to use Khanmigo to help them design questions for an upcoming Socratic seminar where they'll debate whether the Mongol Empire was barbaric. The main lesson he drives home the entire hour echoes Doscher's caveat at the beginning of math class: Khanmigo isn't magic; it's just a source, Vanderpool cautions, and one that should always be cited and double-checked.

If his students are using Khanmigo for their schoolwork, “tell me you’re using it,” Vanderpool says.

Toward the end of class, Vanderpool asks Khanmigo to simulate a conversation with Genghis Khan about the Mongols’ military tactics. The AI needs to be prodded before it will cite any evidence to back up its claims. Once it does cite a history book, the students have more research to do, Vanderpool says.

“Well, now the question is: Is it a real source? So you would want to do independent research to see: Okay, can I confirm that this source actually exists?”
