The metaverse isn’t science fiction anymore. And to truly see that, you don’t have to look at the consumer and gaming virtual worlds. Rather, the industrial metaverse is leading us into the future, making sci-fi into something real.

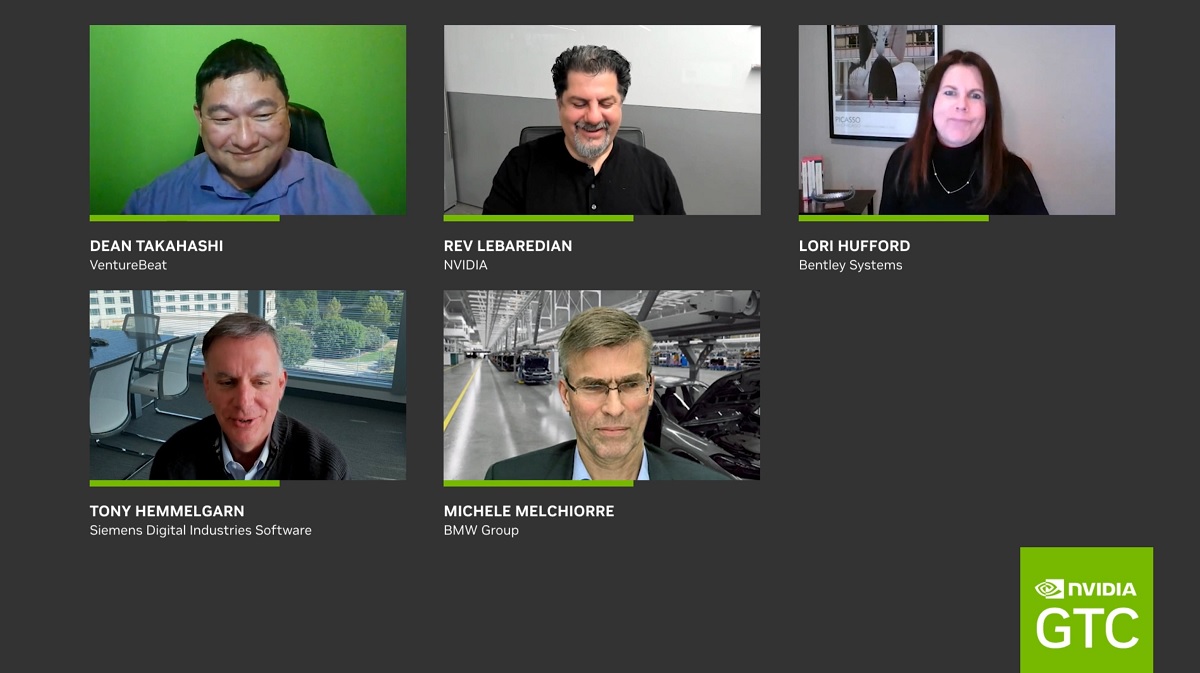

That was one of the subthemes of a panel I moderated at Nvidia GTC 2023. The title was Are We There Yet? A Status Check on the Industrial Metaverse. I’ve moderated multiple sessions on this topic at past GTC events, and this was an opportunity to gauge how far we’ve come.

BMW is just a couple of years away from going into production on a factory in Hungary. But it will open that factory in digital form to its employees early. This digital twin is an exact replica of what will be built in the physical world, and at some point it will absorb sensor data from the real world to get feedback on how the design should be changed.

Michele Melchiorre is the senior vice president of production system, technical planning, tool shop, and plant construction at BMW Group. He said during our panel that the physical factory will be constantly changed and improved, as will its metaverse cousin.

Our other panelists included Rev Lebaredian, vice president of simulation technology and Omniverse engineering at Nvidia; Lori Hufford, vice president of engineering collaboration at Bentley Systems; and Tony Hemmelgarn, CEO of Siemens Digital Industries Software.

They all gave updates on how their companies and industries are leveraging the 3D internet to accelerate their path to automation and digitization with platforms like Nvidia’s Omniverse. We’ll have more talks on the metaverse at our GamesBeat Summit 2023 event on May 22-23 in Los Angeles. Hope to see you there.

Here’s an edited transcript of our panel.

VentureBeat: Welcome to our panel on the industrial metaverse. I’m very happy to moderate one of these metaverse sessions at GTC. Since we’ve done these for a few years now, it makes sense that this one is more like a status update. Are we there yet? We have a great panel to lead us on that discussion. I’d like to have them introduce themselves now.

Lori Hufford: I’m vice president of engineering collaboration at Bentley Systems, the infrastructure engineering software company. My team is focused on using digital twins to improve the creation, delivery, and ongoing operation of infrastructure.

Michele Melchiorre: I kicked off my career almost 35 years ago with Mercedes-Benz. Funny enough, as a young engineer I worked on the simulation of car assembly processes. Simulation tools at the time were more on the level of Pac-Man. After almost 20 years with Mercedes, I went to work in Turin for Chrysler as vice president of engineering. Then in 2018 I joined BMW as managing director for future plans. In 2020 I took responsibility for production system, technical planning, tool shop, and plant construction. Now, with my teams at BMW, I’m developing a new plant that is clean, green, and digital. We have more than 23,000 users of our factory. With Nvidia we’ve reached a new dimension of digital factory planning. You may remember that last year we had the pleasure of giving the keynote at ISC on May 13. We could walk together through the digital twin of our new plant in Hungary. This was exactly two days before the official foundations were laid.

Tony Hemmelgarn: I’m president and CEO of Siemens Digital Industries Software. I’ve been with Siemens for about 16 years, since they acquired us. Frankly, I’ve been in the PLM business my entire life. I worked for companies like Intergraph, SDRC, and UGS before we all came together to form what we are today. My business at Siemens today is product lifecycle management software, as well as electronic design automation software. We also have software for low-code development, and a marketplace for designers of electronic components and things like that. Those are the big chunks of our business.

I’ve been in the business so long (Lori doesn’t even know this) that I was one of the first customers of Bentley Systems, many years ago, with a product called PseudoStation, which eventually turned into MicroStation. I’ve been in the business a long time.

Rev Lebaredian: I’m vice president of Omniverse and simulation technology here at Nvidia. I’ve been here for 21 years. Before Nvidia, I spent my career in Los Angeles working in visual effects, specifically developing renderers and rendering technology for the movies. Since I’ve been at Nvidia I’ve worn many hats, largely working in gaming technology. For the better part of this last decade, I’ve been working toward applying our gaming technologies to simulating things in the real world, so that we can develop AI and develop the industrial metaverse and 3D web.

VentureBeat: This is a very big conference now. It reaches lots of people around the world. I assume that some people aren’t as familiar with the metaverse as you might think. Let’s start with a bit of a definition of the industrial metaverse. Tony, why don’t you take a crack at defining that?

Hemmelgarn: That’s the great thing about defining the metaverse. There are lots of definitions, depending on your perspective and how you’re looking at it. From our point of view, we see an industrial metaverse as a place where real-time, collaborative, photorealistic visualization meets real-world engineering and simulation. It’s a place where users can immerse themselves in operations performance. It’s a place for making decisions with confidence in the engineering process. It’s a long definition, but you’re trying to bring together all those different components into what it means.

VentureBeat: That business simulation part also makes it unique compared to other kinds of talk about the metaverse, especially in gaming, where they’re not as concerned with precise simulation. Lori, do you want to weigh in with your definition?

Hufford: You’ll see some similarities to what Tony was saying. Bentley is focused on infrastructure. In infrastructure, digital twins are the building blocks of virtual worlds that will allow groups to interact and collaborate and solve problems, such as making infrastructure greener and more sustainable and more resilient.

We don’t think the metaverse has arrived yet, but we can see its outlines. It’s immersive. It’s a 3D geospatial user experience. It’s real time, with real-time interactions and real-time data. It has to be engineering-grade. You just talked about that. No loss of data fidelity. Always on. The data is persistent and changes are tracked. It’s also interoperable, with content not locked into just one platform. Finally, and very important, it’s collaborative, powering multi-user interactions.

Lebaredian: From Nvidia’s perspective, the metaverse in general is an extension of the internet. There was a pre-web internet, where the interface was just text. You would type things in a console. I’m old enough to remember that. I was a teenager using the internet before the web arrived. The web transformed everything when the interface to the internet became 2D images and text and all of this multimedia stuff mixed together. It became a lot more accessible. More people could now use the internet to interact with each other. What we found was that the internet is used for a lot of things, not just entertainment and games and watching movies and videos. We use it to build things. We use it to do our work.

We feel that the industrial metaverse in particular is going to be an extension of the internet to a 3D interface, one that’s more like the world around us. In doing that, in enabling that fundamental capability, we’re going to use this 3D web to do a lot more than play games and entertain ourselves. That will be a big thing still, but we’ll use it to build our factories, to build our cities, to build bridges and all of the great stuff that the other panelists here are a big part of making happen.

Melchiorre: I’d add one small thing here, since we’re talking about having fun and playing games. It’s quite important that an industrial application is easy to use. It should be as much fun as playing a game. That could make it very attractive for young people to join us. They see that this is really cool stuff, not old and boring office work. We see a lot going in this direction.

Lebaredian: It’s a good point. This new generation has grown up with 3D video games. That’s a natural interface for them. They feel very natural inside virtual worlds. We need to build new tools and systems that match their world, not just ours.

VentureBeat: The term “metaverse” got started in science fiction 30 years ago, with Neal Stephenson’s novel Snow Crash. Stephenson is actually in the metaverse business now, doing things for the infrastructure of the metaverse. But we’re probably multiple waves into the idea of metaverse development now. Michele, can you talk about what we’re seeing materialize so far in the first wave of metaverse development?

Melchiorre: If you look at what we’ve done so far, we have a lot of different systems standing next to each other, but they’re not connected. We have 3D objects; I’m talking about the production systems that are working in our factories. We have 3D data on our buildings. We have the structure parts. We have layouts. We have facilities. We have technical building equipment. And of course our products, the cars. All of this was standing side by side. With a virtual factory in the metaverse, the digital twins, we bring all these things together. We can use them together wherever people are. That’s really where we see it growing.

Hufford: You alluded to this in your introduction. When many people think of the metaverse, they think of visualizations on a 1:1 scale. But to solve real-world problems, such as increasing demands for infrastructure to meet economic, community, and sustainability goals, and doing that with a hybrid workforce, it has to be much more expansive and collaborative. No one organization can do it alone.

In the first wave we saw tremendous collaboration between leading technology organizations and industry partners, such as my esteemed fellow panelists, combining what each organization and platform does best into some pretty exciting stuff. For example, the Bentley iTwin platform for federating engineering data to establish infrastructure digital twins, the Nvidia Omniverse platform for AI-enhanced visualization and simulation, and Cesium for geospatial streaming. That collaboration is really a foundational element of the first wave.

Hemmelgarn: I agree with my colleagues and the comments there. Lori mentioned the digital twin. The digital twin is never finished either, both in the product definition, meaning the product itself and the digital twin of the manufacturing site, and in what we provide as software providers. How do you further define that digital twin? The digital twin has been around for a long time. Early on it was about assembling things and making sure they fit. Then it was, okay, we have that knocked out, so what do we do to simulate manufacturing? How do we simulate and plan manufacturing? How do I cover the functional characteristics? How do I cover software and electronics? All the things that go into this: manufacturing engineering, manufacturing planning, automation. All those things build out a digital twin.

The same is true in the metaverse. It’s never going to be finished. We’ll keep adding to it. We’ll make it more and more real. Some of the collaboration things on the front end, the high-end visualization, those are there, but we have a long way to go to get to where we need to be. It also depends on your definition, like we said earlier. We have some framework to build upon, but we have a ways to go yet.

VentureBeat: If we think about where we are now, the progress report, can we get started on that discussion?

Hufford: I like to measure progress in terms of real-world impact. Many leading organizations have demonstrated benefits to people and the planet, as well as to their businesses. As an example, consider the Tuas water reclamation plant being built by PUB, Singapore’s national water agency. It’s a one-of-a-kind, multidiscipline mega-project to build a treatment facility for industrial and household wastewater. A project of this scope and complexity faces major challenges in coordinating and communicating among multiple contractors, up to 17 of them. By leveraging iTwin federated models and LumenRT for Nvidia Omniverse, project stakeholders have been able to review safety, quality, and design challenges.

There are lots of other examples, such as WSP winning projects and strengthening stakeholder engagements on important infrastructure projects by producing compelling visualizations. For example, the I-5 bridge replacement project between Oregon and Washington in the United States. Throughout the world, there is tremendous progress being made and demonstrated using metaverse technologies to make real-world impacts on people, the planet, and their businesses.

Hemmelgarn: Progress on things like collaboration and high-end rendering has been very good. Immersive still needs a lot of work. I would also say that, in my opinion, success goes back a bit to what Michele said about making it easy for people. Success, to me, particularly for someone who provides software, is when it’s repeatable and I can easily implement it. I can get to value very quickly. This is why we’re working so closely with Nvidia. How can we make this a lot easier for our customers to deploy and get to value quickly? That’s work we still have to do.

What doesn’t work is a bunch of one-off projects. We need a product and solution set that our customers can implement easily and quickly to get to value. Those are things we’re still working on.

Melchiorre: If it’s too complicated to use, you’ll have what we had in the past: only a few people who are able to use it. They’ll do special training. They’ll make up a small community. That’s not what we want, though. We want all our planners to work on these systems. Especially in recent years, during the COVID-19 pandemic, it was really helpful for us, because you couldn’t travel. The methods of how we work, how we plan the factory, how we set up the factory and then run it, didn’t work anymore. We were simply not allowed, for example, to travel to China. We had to do everything on a digital basis.

Luckily we had already started with the metaverse and this collaboration. It was easy to use. We have these open data formats where we can combine all our data from our different planning tools. We can’t throw away everything we have. We need to bring it together. The visualization is great. You don’t need to be there. The people sit in the U.S., in Europe, in China, and they collaborate.

Here’s what we see right now at our plant in Hungary, which I was talking about. There’s a new plant and a new product coming. We have new results from stress testing. We need a part, a piece of metal. It’s so easy to integrate that into the digital twin. We might need to add, for example, a robot. That’s a different part. We can shift the equipment live. Again, this has to be easy to use. Then people get pulled into it. That’s what we see right now.

Lebaredian: I agree especially with what Tony was saying about this being a journey. It’s essentially endless. We’re going to keep layering on more and more realism, more simulation, more aspects of the world that we’re simulating for these digital twins. That’s why it’s so great. It’s awesome working on endless problems. That’s how we started Nvidia. That’s why we chose computer graphics and rendering as our first thing. We felt it would never be good enough. There’s always more good work to do out there.

One thing has surprised me in the past few years. We started Omniverse five years ago or so, or at least we named it that five years ago. Our thinking was that, because it’s a general platform, the first industry to adopt it would likely be media and entertainment. Next would be AEC, and the last would be industrial manufacturing, which we expected to be slower with this kind of adoption. We’ve been surprised to see that almost inverted. The companies that are most excited about, and most demanding of, these real-time immersive digital twins are companies like BMW, one of our most important partners for Omniverse.

It’s somewhat of a surprise to me, but it seems like we’ve hit a threshold where the technologies we have are finally good enough to realize some of the things we wanted to do. The realization has hit the industry, that they can’t actually build all of the complex things we want sustainably with all of the constraints coming at us from the future, without first simulating them. I was surprised to see how much demand there is, how much interest. But for many of these companies it feels like it’s existential. If they don’t figure out how to create better digital twins and simulate first, we’re just not going to be able to create the things we need.

VentureBeat: Many people thought gaming would lead the way, and in some ways it has. If you ask gamers about the metaverse they say, “You mean World of Warcraft? That’s been around for almost 20 years.” Yet it feels like the tools that industry has been using, game engines and others, have resulted in a bunch of silos. It’s not an interconnected metaverse there. It’s a bunch of online games. It’s interesting to consider how much more will happen in that industry and other industries around collaboration opportunities. Are the tools that originated in film and gaming still very useful? Or are we moving on to other tools for the industrial metaverse?

Melchiorre: We need something like online games. That’s exactly what we’re doing and what we’re using. When you play these games, you have fun. If I’m sitting together with my engineers, they’re having fun with these planning tasks. They sit together virtually, even if they’re distributed all over the world. They can work together on one problem at the same time. It’s quite different from the past, when I would do a task and then share it with my colleagues. The next colleague would do something and I would get feedback. It was quite a slow process. Now, all the involved parties can discuss and work together at the same time. It really speeds up the planning process. We’re able to realize our plans much faster and at lower cost, and planning is quite a costly part of the project.

VentureBeat: Rev, maybe we can move to an interesting question from the perspective of all of GTC here. I’m told that there are 70 or so sessions related to generative AI at GTC, things like ChatGPT and AI in general. How do you think that’s going to affect the industrial metaverse?

Lebaredian: It’s a good question. When we started building Omniverse, even before we named it Omniverse, we had this idea of using gaming technologies to do simulation. That began with the arrival of machine learning and deep learning in their modern form, about 10 years ago, when the first deep learning models, like AlexNet, were trained. We realized that probably the most important component of training systems like these is getting the data to train these networks, to create these AIs.

Most of the data that’s going to be needed is about the real world around us. We want to train robots to perceive the world. We want to train robots to act upon the world. They need to understand how the physics of the world behaves. We then realized that we would not be able to get all the data we need fast enough and cheaply enough by actually capturing it in the real world. We would have to generate this data somehow. That led us to think, “What if we use a lot of the real-time gaming technologies to create more accurate simulations of the world?” Simulations of how the world looks and how it behaves, so we could train these robots and these AIs.

That’s what we’re doing. If you look at the things we’ve built on Omniverse for ourselves, we have a simulator for our autonomous vehicle system, for Nvidia Drive. We call it Drive Sim. We also have a robotics simulator called Isaac Sim. We believe that in order to create more and more advanced AI, we’re going to have to give these AIs life experience that matches the real world. These LLMs, large language models, are amazing, but they’re only trained on text. They’ve never actually seen the world that the text describes. They’ve never experienced that world in any way. The way we’re going to get them to become even more intelligent and able to understand the world around us is by giving them virtual worlds. That’s why AI and virtual worlds are fundamentally linked.

It also works in the other direction. We need world simulation in order to create advanced AI, but we’re also going to need advanced AI, generative AI, to create virtual worlds. Creating worlds is really hard. There’s a lot of expertise that goes into creating these worlds. There are lots of tools, and only a small number of experts know how to use those tools. Using generative AI, we’ll enable more and more people. We’ll expand the set of people who can create things inside the worlds. There’s a loop forming here, where we’ll use generative AI to create virtual worlds, and we’ll use those virtual worlds to create advanced AIs that understand that and can help us create virtual worlds and so on. In the process of creating these virtual worlds, we’ll be able to create things in our real world much better, because the virtual worlds will match the real world more accurately.

VentureBeat: It’s an interesting point, and I think we’ll get to that in our digital twin discussion coming right up here. It definitely feels like there’s a path toward collaboration and reusability here that hasn’t really existed before. It hasn’t been mentioned so far in our progress reports. Are we getting to the point where some of this technology can be reused over and over again? But before we get too far on that, I would say that we’ve barely mentioned digital twins so far. Maybe we want to dive a little deeper into this notion. How do the digital twin and physical twin improve each other over time?

Hemmelgarn: Like I said before, the digital twin is neverending. I define the digital twin of a product as neverending, because it goes through its life cycle of use and recyclability and everything that happens after that. But there’s also the definition from my point of view as a software development company. How do I better define that digital twin for our customers?

The value of the digital twin is how closely the virtual world matches the real world. The closer I can make that relationship, the faster I can make decisions with confidence about what’s going on. Suppose my digital twin doesn’t fully represent the product. For example, as I said a moment ago, if you can only see the mechanical components and not the electronics, that’s not a valuable digital twin. Or it doesn’t represent manufacturing and what I’m doing there, and it’s only focused on design. You have to keep building it out. That’s what we call a comprehensive digital twin.

As we see it, one thing that comes together when we think about the metaverse, the digital twin, and how we keep improving them over time is the idea of closing the loop, bringing the data back and forth. In other words, what I learn in the real world, how do I bring that back into the virtual world so I can make changes and iterate through this process? That closed-loop digital twin is something my organization and my software have done for many years. In physics-based engineering tools, I know how to do that very well. But with the metaverse, we’re now thinking about other things. We have business systems, ERP systems, costing, sustainability reports. All of these also have to be built into these models, so I can make decisions with confidence based not just on the physics that we do well inside our software, but on all the other pieces that come together. Again, our goal is to make those pre-defined, easy solutions, so our customers don’t have to build all those things themselves but can still take a comprehensive view and get to value quicker.

Melchiorre: We do a lot with digital twins. For our production system they’re absolutely necessary. Of course, car assembly will also never stay as it is. We’ll have continuous improvement. We need this on both sides, for the digital twin and the real world, and they need to work together. To give you an example of what we can also do now: you’ve heard of gemba walks, where you go down to the shop floor and walk through it to see what you find. Now we can do virtual gemba walks using Omniverse. That’s helpful. You can do things much earlier, and you can do them without even being on site. As a simple example, if you have to check emergency rules, such as the rules for firefighting, you can do that on the digital side. If we have the model, the digital twin, and the real thing, we can bring them together. That’s very, very helpful.

As Tony mentioned, the software has to be easy to use. Not just for the planner, but also later on, once we have a new factory set up. Then it has to be easy for the people who are running the factory. It’s planning, setup, and then operation. We want to be able to run the digital factory in parallel with the real world, so we can always compare. Maybe in the future the digital factory, the digital twin, can be one step ahead and let us know if something is about to go wrong. If we have a perfect copy, we could know in advance what will happen. That’s a bit further off, the day after tomorrow, maybe. We’ll take one step after the other, and we’ll get closer and closer.

Lebaredian: An important thing within what Michele and Tony were saying there is that this is an endless thing. We can keep making digital twins better and better. But the steps along the way are still valuable. There’s a lot of stuff that each step unlocks, and that is valuable. What you hear from Michele there is that already some of the things they’re implementing at BMW are unlocking value for them, making it better for them, making it easier and more efficient to design their factories. But we’re not done. There’s a lot more work to do.

Melchiorre: I can give another example. If I have an assembly line, it doesn’t matter how good it is. We’ll always improve it. You won’t ever get to an end. I expect the same of the digital twin. It’s endless. It doesn’t mean we’re not happy with the result. But we always have to move forward.

VentureBeat: As you’ve collected a lot of learnings and adjusted how you’re developing for the industrial metaverse, what are you doubling down on? What are you doing less of? What represents the real opportunity?

Hufford: To pile on a little bit with the concept of digital twins, at Bentley we’re very focused on advancing infrastructure digital twins. Opening up the data contained in engineering files provides a tremendous amount of value across the end-to-end life cycle of infrastructure, from planning to design to construction to operations. But key to the adoption of these benefits, in addition to the ease of use we’ve been talking about, is making sure we enable this technology through our users’ existing workflows, by augmenting those workflows.

We’re focused on productizing these capabilities so more infrastructure firms can benefit, leveraging the work they’re already doing by adding infrastructure digital twin-based workflows to their existing file-based workflows. We talked at the beginning of the panel about partnerships. We’re definitely working on that as well, with Nvidia and Omniverse, to enable real-time immersive 3D and 4D experiences using infrastructure digital twins. But again, the key is augmenting existing workflows and productizing the capabilities to bring them to firms and extend their benefits.

Melchiorre: If I look at the workflows, the digital twins and the metaverse, Omniverse, allow us to change workflows, or make workflows much faster. In the past we couldn’t bring all these stages together. We had data in different silos. But now, bringing all the data together in one location where all the planners can meet there with all their data, we can do these things in parallel. We really speed up. That’s a big value.

Hufford: Absolutely. Being able to look at the design in the context of the entire design, not having the data in individual silos, that’s really a huge benefit.

Melchiorre: If you make changes in the Omniverse world, the changes to the data are written back to the original system. That’s a big value. It’s really speeding up the process of improvement.

VentureBeat: Rev, where do you think we are on the company to company collaboration here? In some ways there’s some risk that we have a bunch of collaboration within companies about creating their version of the industrial metaverse, but company to company interaction may be something more valuable in the long run. I wonder about things like interoperability and portability of assets. How are we coming along on that front?

Lebaredian: This is my personal opinion, the sense I have from working on Omniverse the past few years and developing partnerships with Lori and Tony at Bentley and Siemens and others. My impression is that most of the big players we’re talking to that create the tools for these systems recognize that no one of them is going to be able to create all the tools and all the systems everyone needs to build everything. It’s actually never been true that any major endeavor uses tools from just one vendor. No one vendor can have the expertise in everything.

There’s a real, enlightened sort of thinking around interoperability that’s been growing. Now I think it’s not a question of whether we should do it, or how we should do it. It’s just very hard. The technical work of building data models that describe everything, and making them interoperable, is an unsolved problem. It’s a big challenge in and of itself. But I have faith in our engineers and our people. As long as the will is there, it will happen. I see momentum toward that.

At Nvidia, our unique contribution here is that we’re not a tools creator. We create systems and algorithms and technology that’s lower in the stack, below the tools, below the workflows. Our unique contribution here is that we can be somewhat more neutral than a lot of the entrenched players in the data formats and such. We just want to be able to use our computers with the data people already have. But before we can do that, it has to all aggregate together into one harmonious thing, so we can help contribute there and broker these discussions between the various players.

Hemmelgarn: One of the tenets of my organization is that you have to be open. You can’t be a closed organization. You can’t do everything for everyone. Now, are there areas where I could say that if you use more of our solutions, the integration is better and easier? Yes, at times. But you still have to be open to other solutions that are out there. For example, the math engine behind our physics-based 3D modeling is used by many of my competitors. The value to my customers is that the geometry never has to be translated. It’s the same geometry from system to system.

I would also say there’s an aspect of this, when you talk company to company, that’s about the customers and how they work and collaborate. For example, in automotive, we have teams that are almost setting the de facto standard for managing data in the automotive world. We’ve been asked a lot about how you protect your IP as a tier one provider to automotive. In some cases a tier one is working together with another tier one on one vehicle program, and then on the next vehicle program they’re competing with each other. You have to make sure you protect data. That’s why it’s not just about going into an environment to collaborate. You also need the engines behind it that handle data management, configuration, security, and everything else that’s already there to protect your IP and your design rights. Those things have been built into this as well. It’s great to collaborate, but you have to take care of what each party needs to protect in its own environment while still collaborating.

Hufford: I’ll double down on the need for openness and extensibility, at all levels. It really is the foundation for portability, and for letting each platform and each organization bring its unique value to solve bigger and more complex problems.

VentureBeat: Rev, do you want to point us to some of the road ahead, some of the next leaps you would like to see happen in the industrial metaverse?

Lebaredian: We’re on the cusp of something big here. We’ve been working toward this for many years. Lori and Tony have been working toward building digital twins, it sounds like, for decades. We’ve come into this game more recently. It’s been years for us, but not as long as that. I think we’re about to do it. You can hear the excitement in Michele’s voice when he’s talking about their digital twin.

Moving forward, from my perspective, there’s a new set of resources in computation that just didn’t exist before. There’s the cloud, and what it unlocks in terms of being able to compute more than was ever available to us. There’s connectivity, so we can move more of this data around seamlessly and collaborate in real time. All these things have been happening around us, and they’re accelerating. They’re finally starting to converge with what we’ve dreamed, for decades, that a digital twin and simulations could be. I’d keep an eye out for that. Within the next year or two we’ll see some magical stuff happening with the convergence of these things.

Melchiorre: I can’t wait for the real opening of our plant in Debrecen, Hungary. This will be the first full digital twin: a brand new plant with a digital twin. It’s one thing, as I mentioned before, to plan it and set it up. But within two years we’ll officially start production there. I really can’t wait to see how good that digital twin is, and whether we can run these two factories in parallel.

Hufford: Certainly there’s going to be an evolution of communication, whether it be people to people, people to assets, assets to people, or even assets to assets. Just think about a hub that says, “Hey, I need some maintenance now.” The engineer who needs to go out and perform that maintenance has been trained using augmented reality and knows exactly what to do. They can call on experts. This proliferation of communication is extremely exciting. I’m also looking forward to seeing the adoption curve accelerate over time as this technology becomes more productized.

Hemmelgarn: I kind of compare this to the industrial internet of things. It was way overhyped early on. What happened was, we had to go back to real solutions and real answers that made a difference. Then I think people underestimated what it would mean to them. I’d say the same thing about additive manufacturing. Way overhyped. Early on people would gush about how, if you broke your coffee cup, you wouldn’t have to go to the store. You’d just print your own. There was some silly stuff going on early on. But if you look at what’s happening in additive today, people don’t appreciate the major changes it has made in manufacturing. Same thing with IoT. Major changes.

I think the same thing is true here. The metaverse has probably been overhyped for a while, but real value will come. It just takes a bit of time. Like Rev said earlier, there’s something great about this industry, and I tell my team this: one of the greatest places to work is developing software like this for digital twins, because you’re never finished. We go to automotive companies and they say, “Take 50% out of my R&D cost over the next five years.” You do that, you think you’re done, and you celebrate. Then you have to do it again. We start over one more time. That’s the great thing about this space. You’re never finished. We run a risk of overhyping it, but there’s also a risk of underestimating what it means for the future. I think both are kind of true where we are right now.

VentureBeat: I like how you’re all at the forefront of making that science fiction come true. Who would have thought that the industrial people of the world would lead us into this future? I look forward to the day when enterprises like yours can create pieces of the metaverse that are useful to gaming companies, and vice versa. Different industries can all start collaborating with each other in this digital world that we’re going to move into. Then we get into solving problems like portability of assets, universal interchange, and reusability of the things that get created, so that no one has to feel like they’re working on this all by themselves. There’s a community of people who can help each other. That would be wonderful.
