Robert Triggs / Android Authority

How far can you, or rather, should you, push an image through editing? It's a bit of a philosophical debate that I've previously dabbled in and ranted about. But the fact of the matter is that AI is everywhere and is a critical part of modern computational photography. Increasingly, though, AI is becoming part of the post-photography experience too, and Google is leading the charge here. Two years ago, the company introduced Magic Eraser with the Pixel 6. The seemingly magical object removal tool made it a cinch to eliminate errant objects, people, and more with a single tap.

But removing objects is just one aspect of photo editing, and Google's upcoming Magic Editor promises a whole lot more. At this year's Google I/O, the company debuted a slew of upgrades to the built-in photo editor on Pixel hardware. The updated app promises to use a combination of generative AI and semantic segmentation to simplify tasks like moving objects around within a frame, resizing them, changing the color of the sky, and more.

That's very cool, but I have my reservations. For one, Google has a history of announcing excellent photo editor additions, like the ability to remove fences, and then not delivering them. Additionally, cool as these features are, I don't want to wait for Google to release them, especially when I can open Photoshop on my computer and try similar tools right now. That's precisely what I've been doing since Adobe dropped the Photoshop Beta with generative AI features.

Bear in mind that the generative AI features are, so far, only available in the desktop beta version of Photoshop. However, since this is a cloud-connected feature that doesn't rely on local processing, I'm hopeful that Adobe will bring it to Photoshop on mobile sooner or later, making it a more direct competitor to Google's Magic Editor. Anyway, I digress. Let's get back to the matter at hand: does it deliver the goods? I put the app through a range of use cases that align with everything the Magic Editor promises, and perhaps a touch more. Here are the results.

Extending the canvas beyond the original photograph

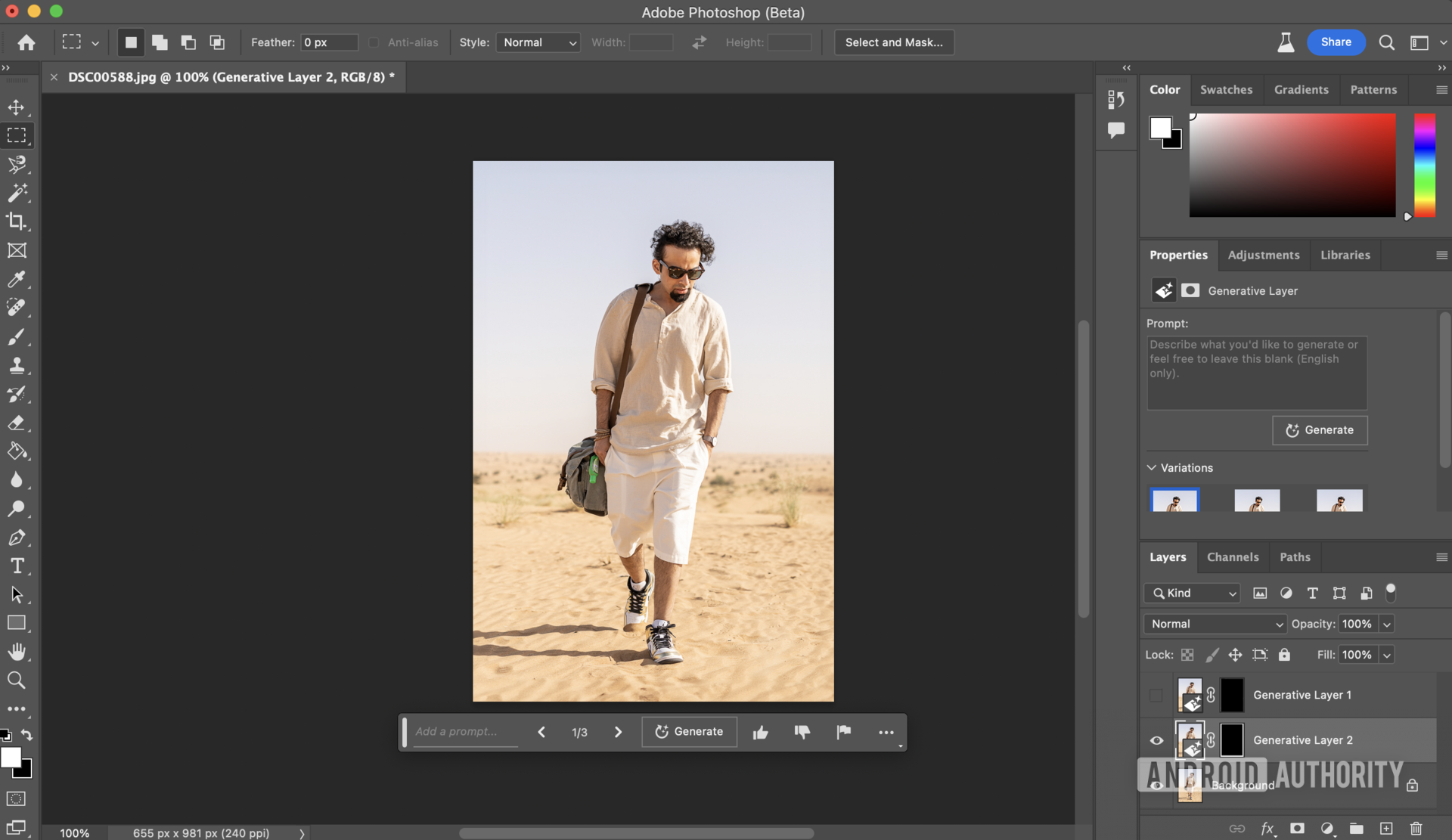

Dhruv Bhutani / Android Authority

I'm just as guilty as the next guy of framing pictures for social media. I struck my best Nathan Drake pose in the desert, and the setting made for a pretty iconic shot for an Instagram story. However, the tall vertical frame doesn't work nearly as well as a photograph on a website or even as a regular Instagram post, where a more traditional 4:3 format would be better suited. So, I did the obvious thing and dropped the shot into Photoshop.

Dhruv Bhutani / Android Authority

After that, I extended the canvas a bit and tapped the generate button. Within seconds, Adobe's generative AI filled in the new area with a close approximation of the actual scene. Okay, sure, I distinctly remember some palm trees in the distance, and the AI can't possibly know what the real setting looked like. However, for my purposes, the photograph more than suffices. Score one for generative AI!

Meme potential

Dhruv Bhutani / Android Authority

GIFs and memes are fine, but my group of friends and I tend to go all in with the jokes. Trust me, you can get pretty creative with stickers and basic image editing tools when you want to troll a buddy. However, Photoshop's generative AI tool lets you go a whole lot further.

Dhruv Bhutani / Android Authority

In a recent group chat, we created dinosaur versions of ourselves just to drive home the point that we were getting old. All I had to do was draw a selection box around my head, type a short prompt along the lines of "thinking dinosaur," and tap generate. That's it. Adobe's AI did the rest "auto-magically." Guess who got the most laughs?

Removing objects and generating backgrounds

Dhruv Bhutani / Android Authority

Google's Magic Eraser is pretty good at removing people and objects from a background, but it isn't perfect. Limited by on-device compute and machine learning, it often leaves streaks or patchy segments in the image, with minimal scope for further editing.

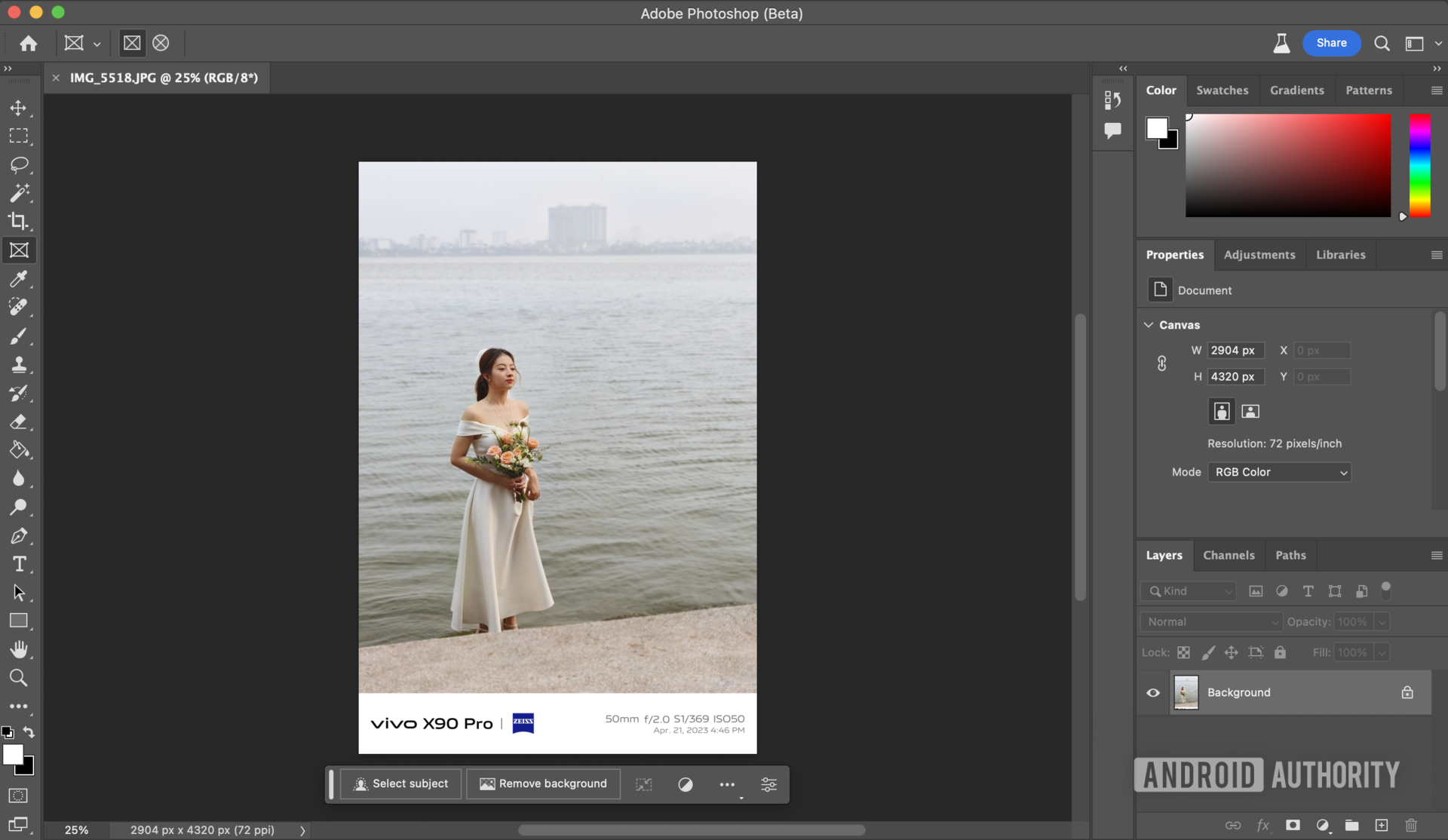

A couple of months back, I managed to grab this shot of a bridal photoshoot in Vietnam. Unfortunately, I forgot to turn off the phone's built-in watermark feature. I could crop in, sure, but that would take away from the framing of the image. So, back to Photoshop we go.

Dhruv Bhutani / Android Authority

After I drew a quick selection box around the watermark and tapped the generate button, Photoshop presented me with a believable, true-to-life image using its generative models. But I wanted to take it a step further and try removing the gorgeous bride from the shot altogether. How well would generative AI handle that? The results speak for themselves: I couldn't spot any aberrations, even when zooming into the image.

Moving an object within a frame and then refilling the space

Dhruv Bhutani / Android Authority

Okay, so we've established that expanding an image, removing objects, and generating new background detail are all possible. But I wanted to see how far I could push generative AI to create a realistic-looking shot. So, I took the same image of the bride and removed the watermark along the lower edge. I then moved the subject and used Photoshop's generative AI feature to fill in the background where she had been standing.

My next step was to extend the background. This is where some of the shortcomings of AI-based editing start to show. The difference in exposure and contrast between the original and generated areas creates a visible line running through the image. While that's easy to correct with a few extra steps, it's worth remembering that chasing photorealism still requires manual work.

Bonus: Fully altering the photograph

Dhruv Bhutani / Android Authority

Next, I decided to swap out the background for a more aesthetic sunset skyline. Finally, I used the generative fill function to add some tropical foliage to the blank space along the bottom left of the image. I'll be honest here: the results aren't perfect, but with a bit more time and fine-tuning, they could be improved a lot. More importantly, scaled down to social media size, I doubt anyone would know the difference.

I know, I'm probably going to get roasted for this in the comments section. After all, I'm the same guy who lambasted Google's announcement of the Magic Editor. Truth be told, I'm still not sure how comfortable I am with how easy it has become to alter reality, whether slightly or substantially.

Ultimately, as always, it comes down to the end user and whether they exercise the diligence needed to avoid misuse. I'd love to see a standardized digital watermark that doesn't mar the image but can quickly inform viewers that some form of generative AI has been applied to it. Until then, if you want a taste of what Google's Magic Editor promises, you don't need to wait: Adobe's Photoshop Beta has you covered.
