An Arizona mother described a frightening experience with scammers using artificial intelligence to fake her daughter’s voice in an extortion attempt.

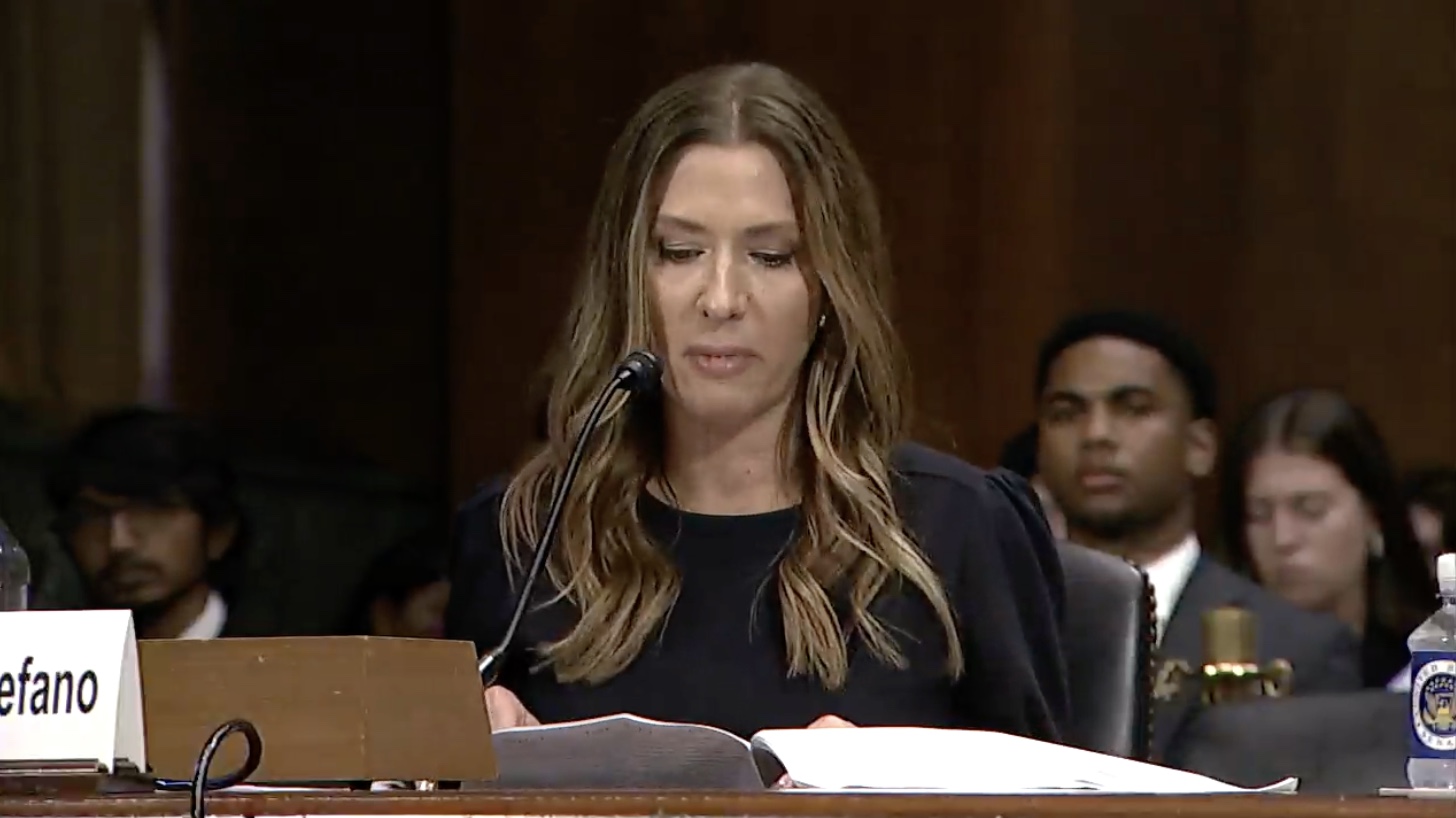

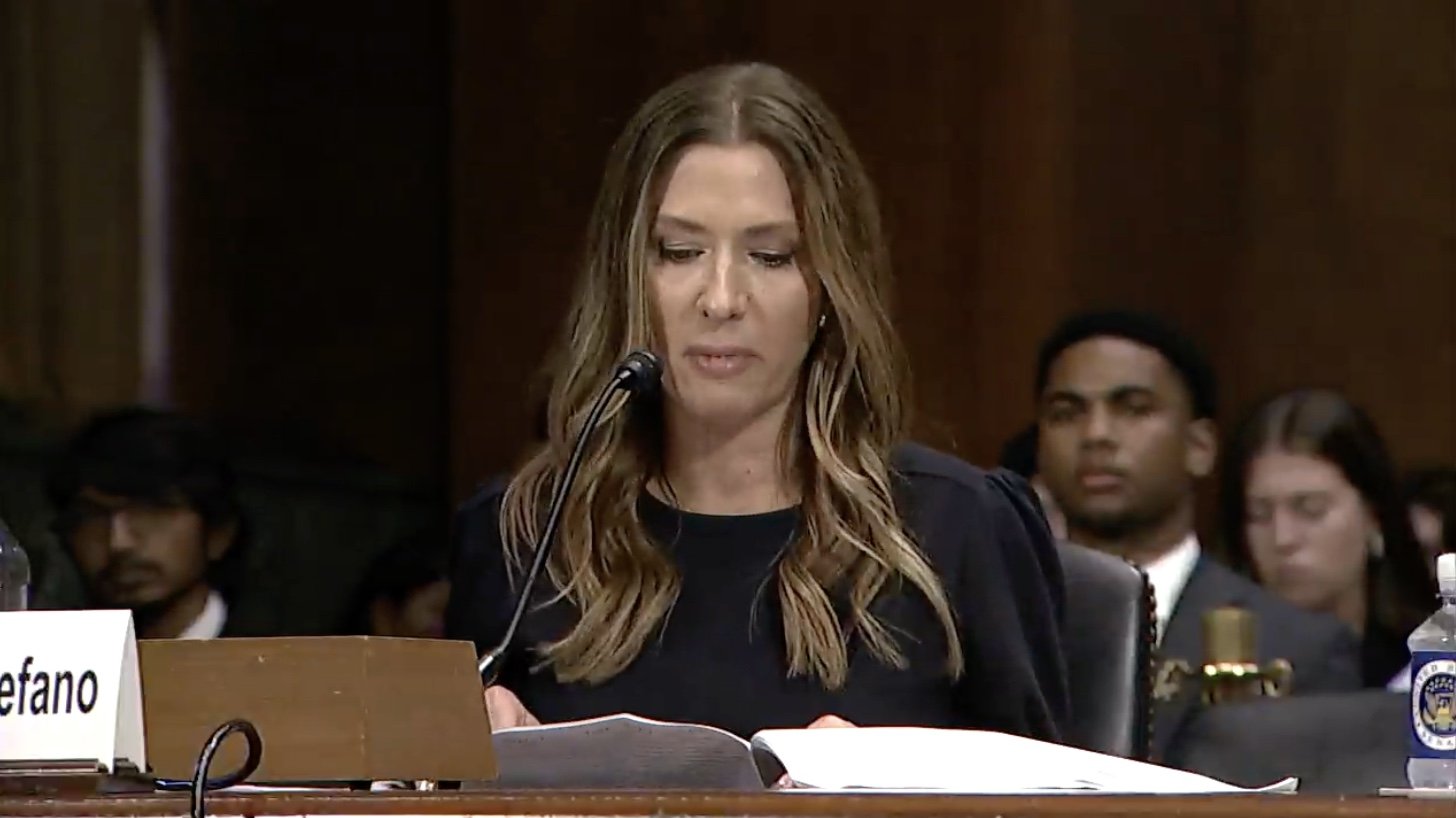

Jennifer DeStefano testified at a Senate Judiciary Subcommittee hearing on artificial intelligence and human rights Tuesday.

“AI is revolutionizing and unraveling the very foundation of our social fabric by creating doubt and fear in what was once never questioned, the sound of a loved one’s voice,” DeStefano told lawmakers.

She said the experience started when her husband took their older daughter Brie and their youngest son to Northern Arizona to train for ski races while she stayed home in the Phoenix area with their older son and youngest daughter.

She had just gotten to a dance studio for her daughter’s rehearsal for a recital.

DeStefano got a call from an unknown number. She answered in case her daughter had been injured on the slopes and the call was from a hospital.

On the other end, she heard her daughter, Briana, sobbing and saying “mom.”

“It was my daughter’s voice. It was her cries, her sobs. It was the way she spoke. I will never be able to shake that voice out of (my) mind,” she testified.

Only it wasn’t. It was all AI-generated to sound like her daughter.

Then a man’s voice came on the line and threatened to drug the girl and dump her in Mexico if DeStefano didn’t pay a $1 million ransom.

All the while, DeStefano said she could hear what she thought was her daughter in the background desperately pleading, “Mom, help me!”

Another mother in the studio called 911 while DeStefano remained on the call, negotiating the ransom down to $50,000.

The other mother told her that the 911 worker was familiar with an AI scam involving replication of a loved one’s voice.

DeStefano was not convinced until she reached her husband, who found their daughter resting in bed.

When she realized her daughter was safe, her fear turned into fury.

“To go so far as to fake my daughter’s kidnapping was beyond the lowest of the low for money,” DeStefano said.

Cases of AI technology being used to defraud individuals are on the rise.

The Department of Homeland Security has issued a warning regarding this technology, classifying it as a burgeoning threat within the broader scope of synthetic media.

The scams use AI and machine learning to craft believable, realistic videos, pictures, audio, and text that depict events that never happened.

DHS advises people to independently verify requests for money, and to stay alert for typical scam tactics, which often involve requests for gift cards, cryptocurrency, or wire transfers.

The Senate committee has held a series of hearings on the threats that artificial intelligence poses.
