Cybersecurity professionals must manage many tools and often overwhelming amounts of data from multiple sources. To simplify their work and help them address security threats, Microsoft Corp. today announced Security Copilot, an easy-to-use artificial intelligence assistant.

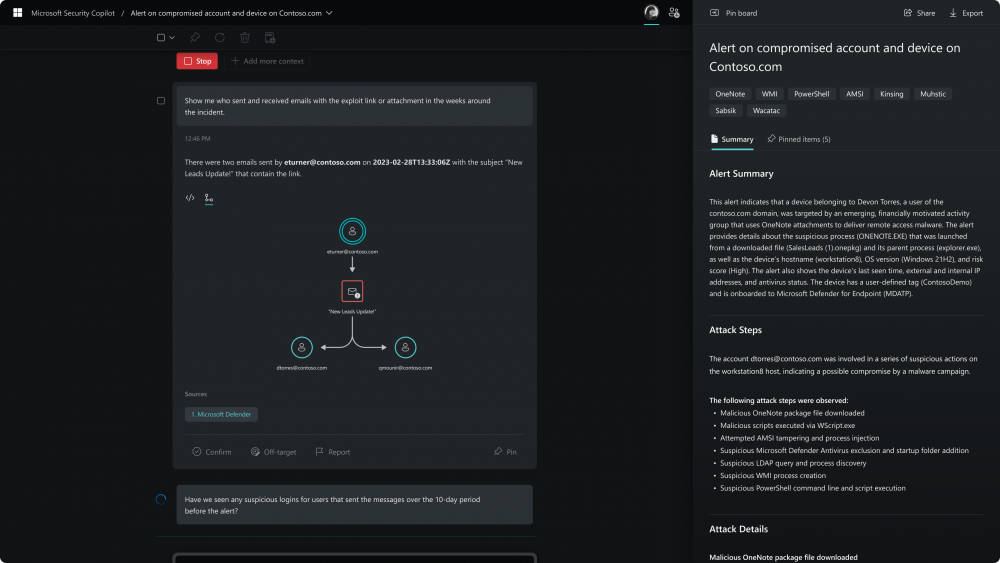

Security Copilot is built on OpenAI LP's GPT-4, the company's newest model, and lets cybersecurity professionals ask questions and get answers about current security issues affecting their environment. It can also incorporate knowledge from within a customer's organization to return information the team can use, learn from existing intelligence, correlate current threat activity with data from other tools and deliver up-to-date information.

“Today the odds remain stacked against cybersecurity professionals,” said Vasu Jakkal, corporate vice president of Microsoft Security. “Too often, they fight an asymmetric battle against relentless and sophisticated attackers.”

To build the product, Microsoft paired OpenAI's large language model with its own threat analysis footprint. The language model lets the assistant understand the questions being asked and summarize threat reports generated by a company's cybersecurity team and drawn from external data. Microsoft's model is informed by more than 100 different data sources and receives more than 65 trillion threat signals every day, the company said.

With Security Copilot, professionals can quickly begin investigating critical incidents by querying data in natural language. The assistant can also use its understanding of conversational language to summarize processes and events into quick reports that bring team members up to speed faster.

For example, a user could prompt the assistant to produce an incident response report for a particular ongoing investigation based on a set of tools and events. The AI assistant can pull data and information from those tools and display a summary, report or other information based on the query. Users can then refine their prompt to have the AI provide more information, change how it displays or summarizes the report, or change which tools it reaches out to and which incident information it draws from.

Jakkal also said the assistant can anticipate and discover potential threats that professionals might miss by drawing on Microsoft's global threat intelligence to hunt down anomalies and surface potential issues. Professionals could also use it to extend their own skills, for example by reverse-engineering a potentially malicious script and sending the findings to a colleague who can decide whether it should be flagged for further study.

Although this seems like an extremely useful tool for security professionals, it isn't without its flaws. Across all of its GPT-4-powered Copilot tools, Microsoft has been quick to warn that the AI doesn't "always get everything right," and Security Copilot carries the same warning. To address this, Microsoft lets users report an AI-generated response as off-target or incorrect so that the model can be better tuned.

However, that approach may fall short for a cybersecurity product. "Security Copilot requires that users check the accuracy of the outputs, without giving users any scores as to the likelihood that an output is correct or not," said Avivah Litan, distinguished vice president analyst at Gartner Inc. "There's an added danger that users will rely on the outputs and assume they are correct, which is an unsafe approach given we are talking about enterprise security."

In Microsoft's demo, the AI's error was benign: a reference to Windows 9, which doesn't exist. In a real-world investigation, however, an AI could make a mistake that is harder for a security professional to catch and that could get passed along. "The market has a long way to go before enterprises can comfortably use these services," Litan said.

To strengthen the threat intelligence behind Security Copilot, Microsoft has been on an acquisition spree to bolster its threat detection capabilities. Its purchases include threat management firm RiskIQ Inc. and threat analysis company Miburo.

The AI assistant integrates natively with several Microsoft security products, including Sentinel, its cloud-based enterprise cyberthreat management solution, and Defender, its anti-malware solution. The software is currently available in private preview.

Image: Microsoft