[ad_1]

The human brain is a mystery that’s being slowly unraveled, but many unanswered questions remain. One of the big ones is understanding how the brain translates visual information into mental images as processed by our occipital and temporal lobes. And as with so many areas in science nowadays—both biological and technological—AI can help with that.

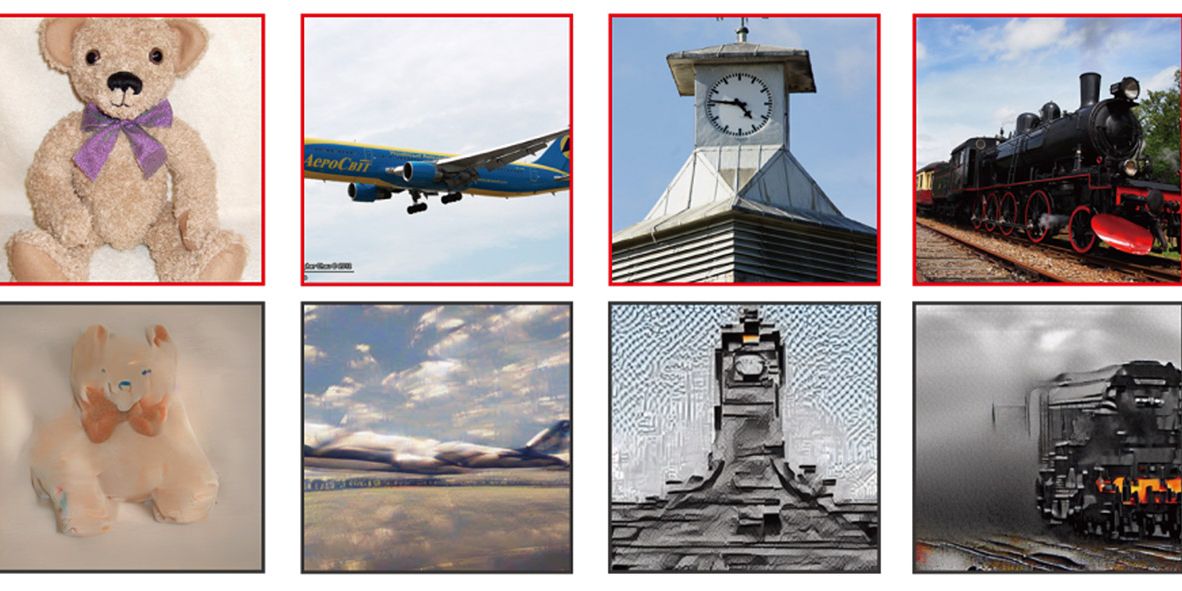

Using an AI engine called Stable Diffusion, which is similar to other text-to-image generative AIs like DALL-E 2 and Midjourney, scientists from Osaka University in Japan trained the algorithm on tens of thousands of brain scans from four individuals, scans that were originally gathered for an unrelated study. These images portrayed brain activity as the participants were looking at simple images, like a teddy bear, a train, or a clock tower.

The scientists plan to present their study at an upcoming computer vision conference, and published their findings on a preprint server in December 2022.

More From Popular Mechanics

When someone looks at an image, functional magnetic resonance imaging (fMRI) scans can detect changes in blood flow to regions of the brain. Here the temporal and occipital lobes work together to register information about the image itself as well as its visual layout, including things like perspective and scale. Once these readings are captured, they can be easily converted into an imitation image for training AI.

There was just one problem: when the AI analyzed a brain scan, it could roughly approximate scale and perspective — information gathered from the occipital lobe — but couldn’t discern exactly what the object was. The results were instead amorphous works of abstract art that resembled an objects’ overall position and perspective but were otherwise unrecognizable.

Although the AI would likely perform better with more information to draw from, scientists were limited by the data at hand. So to overcome this problem, the researchers incorporated keywords drawn from the data of each brain scan and leveraged Stable Diffusion’s text-to-image capabilities. With the help of these captions, the AI could now accurately combine the two data inputs — the visual fMRI scans and the textual captions — to recreate images remarkably similar to the ones seen by the original participants.

When a user normally inputs a word into an AI text-to-image generator, DALL-E, Midjourney, or any other algorithm of your choice creates an image with no regard to perspective, scale, or positioning. But because Stable Diffusion was also drawing on information captured by the brain’s occipital lobe, it created a stunningly close approximation of the specific image shown to participants. The researchers reserved a few images from the initial training pool in order to assess the algorithm — and the AI subsequently aced the test.

This impressive mind-reading ability comes with a few caveats—the biggest being that it’s only working off a pool of four people’s brain scans. The AI would need to be trained on much larger data sets before being deployed in any serious way. It could also only identify images it’s already been exposed to during training. If it were asked to recognize, say, a horse from someone’s brain scan, and it hadn’t been shown a brain scan of a horse before, it couldn’t recognize the image.

The paper’s co-author Shinji Nishimoto, a neuroscientist from Osaka University, hopes that one day the technology could help analyze human dreams and develop a greater understanding of animal perception more broadly. For now, the world’s best mind readers mind be the “minds” humans created themselves.

Darren lives in Portland, has a cat, and writes/edits about sci-fi and how our world works. You can find his previous stuff at Gizmodo and Paste if you look hard enough.

[ad_2]

Source link